Thank you. It was this.

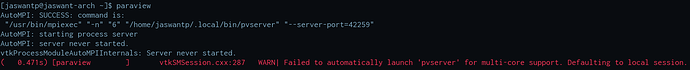

I still get the same error from ParaView with AutoMPI. However, there are no issues when I start pvserver manually.

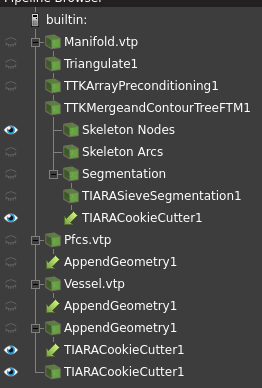

There are even more issues when running a full pipeline that is part composite and part homogeneous in this way, especially with third-party plugins. For starters, these are the errors I get. I guess it's going to be a long (fun) ride debugging it now. This pipeline is a mix of TTK and some custom filters.

Waiting for client...

Connection URL: cs://jaswant-arch:11111

Accepting connection(s): jaswant-arch:11111

Client connected.

[Common] (TTK ASCII-art welcome banner, printed once by each of the four pvserver ranks; the interleaved copies are omitted here)

[Common] Welcome!

[ArrayPreconditioning] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[ArrayPreconditioning] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[ArrayPreconditioning] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[ArrayPreconditioning] Preconditioned selected scalar arrays . [0.000s|12T|100%]

[ArrayPreconditioning] Preconditioned selected scalar arrays . [0.000s|12T|100%]

[ArrayPreconditioning] Preconditioned selected scalar arrays . [0.000s|12T|100%]

[FTMTree] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[FTMTree] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[FTMTree] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

( 160.037s) [pvserver.1 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x5620886ed3f0): Algorithm ttkFTMTree(0x562089f999e0) returned failure for request: vtkInformation (0x562088a9a200)

Debug: Off

Modified Time: 1897980

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.037s) [pvserver.2 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x55897c9d5b80): Algorithm ttkFTMTree(0x55897e2820b0) returned failure for request: vtkInformation (0x55897cd831b0)

Debug: Off

Modified Time: 1897980

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.035s) [pvserver.3 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x55cfd066ab60): Algorithm ttkFTMTree(0x55cfd1f16e00) returned failure for request: vtkInformation (0x55cfd0a17b20)

Debug: Off

Modified Time: 1897980

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.035s) [pvserver.3 ] vtkExecutive.cxx:752 ERR| vtkCompositeDataPipeline (0x55cfd0a54060): Algorithm vtkPVGeometryFilter(0x55cfd0a13ca0) returned failure for request: vtkInformation (0x55cfd0a54ea0)

Debug: Off

Modified Time: 1898060

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA_OBJECT

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.037s) [pvserver.1 ] vtkExecutive.cxx:752 ERR| vtkCompositeDataPipeline (0x562088ad6690): Algorithm vtkPVGeometryFilter(0x562088a96380) returned failure for request: vtkInformation (0x562088ad74d0)

Debug: Off

Modified Time: 1898060

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA_OBJECT

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.037s) [pvserver.2 ] vtkExecutive.cxx:752 ERR| vtkCompositeDataPipeline (0x55897cdbf6f0): Algorithm vtkPVGeometryFilter(0x55897cd7f420) returned failure for request: vtkInformation (0x55897cdc0530)

Debug: Off

Modified Time: 1898060

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA_OBJECT

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

[ArrayPreconditioning] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[ArrayPreconditioning] Generated order array for scalar array `Elevation'

[ArrayPreconditioning] Preconditioned selected scalar arrays . [0.172s|12T|100%]

[FTMTree] %%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

[OneSkeleton] Built 197120 edges .............................. [0.016s|1T|100%]

[ZeroSkeleton] Built 66049 vertex neighbors .................. [0.011s|12T|100%]

[FTMTree] Launching on field Elevation

[FTMTree] ======================================================================

[FTMTree] number of threads : 12

[FTMTree] * debug lvl : 3

[FTMTree] * tree type : Contour

[FTMTree] ======================================================================

[FTMTree] alloc .............................................. [0.007s|12T|100%]

[FTMTree] init ............................................... [0.489s|12T|100%]

[FTMTree] sort step .......................................... [0.027s|12T|100%]

[FTMTree] leafSearch ......................................... [0.026s|12T|100%]

[FTMtree_MT] leafGrowth JT ................................... [0.015s|12T|100%]

[FTMtree_MT] leafGrowth ST ................................... [0.019s|12T|100%]

[FTMtree_MT] trunk ST ........................................ [0.001s|12T|100%]

[FTMtree_MT] segment ST ...................................... [0.000s|12T|100%]

[FTMtree_MT] trunk JT ........................................ [0.008s|12T|100%]

[FTMtree_MT] segment JT ...................................... [0.000s|12T|100%]

[FTMTree] merge trees ....................................... [0.079s|12T|100%]

[FTMTree] combine full ....................................... [0.001s|12T|100%]

[FTMTree] build tree ......................................... [0.080s|12T|100%]

[FTMTree] Total ............................................. [0.596s|12T|100%]

( 160.947s) [pvserver.2 ] TiSieve.cpp:134 ERR| TiSieve (0x558980568440): Input is missing point data

( 160.947s) [pvserver.1 ] TiSieve.cpp:134 ERR| TiSieve (0x56208c21d120): Input is missing point data

( 160.947s) [pvserver.2 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x5589807bdd90): Algorithm TiSieve(0x558980568440) returned failure for request: vtkInformation (0x55898257b9d0)

Debug: Off

Modified Time: 1899556

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.945s) [pvserver.3 ] TiSieve.cpp:134 ERR| TiSieve (0x55cfd41f5600): Input is missing point data

( 160.945s) [pvserver.3 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x55cfd44528e0): Algorithm TiSieve(0x55cfd41f5600) returned failure for request: vtkInformation (0x55cfd6211120)

Debug: Off

Modified Time: 1899556

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.947s) [pvserver.1 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x56208c4dca20): Algorithm TiSieve(0x56208c21d120) returned failure for request: vtkInformation (0x56208e248270)

Debug: Off

Modified Time: 1899556

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.947s) [pvserver.2 ] vtkExecutive.cxx:752 ERR| vtkCompositeDataPipeline (0x558982592130): Algorithm vtkPVGeometryFilter(0x55898257c000) returned failure for request: vtkInformation (0x558982592f70)

Debug: Off

Modified Time: 1899728

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA_OBJECT

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.947s) [pvserver.1 ] vtkExecutive.cxx:752 ERR| vtkCompositeDataPipeline (0x56208e25e9d0): Algorithm vtkPVGeometryFilter(0x56208e2488a0) returned failure for request: vtkInformation (0x56208e25f810)

Debug: Off

Modified Time: 1899728

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA_OBJECT

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 160.945s) [pvserver.3 ] vtkExecutive.cxx:752 ERR| vtkCompositeDataPipeline (0x55cfd6227880): Algorithm vtkPVGeometryFilter(0x55cfd6211750) returned failure for request: vtkInformation (0x55cfd62286c0)

Debug: Off

Modified Time: 1899728

Reference Count: 1

Registered Events: (none)

Request: REQUEST_DATA_OBJECT

FROM_OUTPUT_PORT: 0

ALGORITHM_AFTER_FORWARD: 1

FORWARD_DIRECTION: 0

( 162.078s) [pvserver.0 ] vtkMPICommunicator.cxx:65 WARN| MPI had an error

------------------------------------------------

MPI_ERR_TRUNCATE: message truncated

------------------------------------------------

--------------------------------------------------------------------------

MPI_ABORT was invoked on rank 0 in communicator MPI_COMM_WORLD

with errorcode 15.

NOTE: invoking MPI_ABORT causes Open MPI to kill all MPI processes.

You may or may not see output from other processes, depending on

exactly when Open MPI kills them.

--------------------------------------------------------------------------

Loguru caught a signal: SIGTERM

Stack trace:

21 0x55cfccea355e pvserver(+0x255e) [0x55cfccea355e]

20 0x7f216df8b152 __libc_start_main + 242

19 0x55cfccea34e5 pvserver(+0x24e5) [0x55cfccea34e5]

18 0x7f216c2adbcf vtkMultiProcessController::BroadcastProcessRMIs(int, int) + 239

17 0x7f216c2ad990 vtkMultiProcessController::ProcessRMI(int, void*, int, int) + 304

16 0x7f216c95f4c5 vtkPVSessionCore::ExecuteStreamSatelliteCallback() + 181

15 0x7f216c95eef2 vtkPVSessionCore::ExecuteStreamInternal(vtkClientServerStream const&, bool) + 242

14 0x7f216c4a0b0d vtkClientServerInterpreter::ProcessStream(vtkClientServerStream const&) + 29

13 0x7f216c4a065e vtkClientServerInterpreter::ProcessOneMessage(vtkClientServerStream const&, int) + 190

12 0x7f216c4a0545 vtkClientServerInterpreter::ProcessCommandInvoke(vtkClientServerStream const&, int) + 1173

11 0x7f216cee4510 vtkPVRenderViewCommand(vtkClientServerInterpreter*, vtkObjectBase*, char const*, vtkClientServerStream const&, vtkClientServerStream&, void*) + 25248

10 0x7f216a7224da vtkPVRenderView::Update() + 426

9 0x7f216a7368d7 vtkPVView::AllReduce(unsigned long long, unsigned long long&, int, bool) + 679

8 0x7f21675c2ea0 vtkMPICommunicator::BroadcastVoidArray(void*, long long, int, int) + 176

7 0x7f21674f113b PMPI_Bcast + 283

6 0x7f215a9103ec ompi_coll_tuned_bcast_intra_dec_fixed + 252

5 0x7f216753777c ompi_coll_base_bcast_intra_binomial + 188

4 0x7f21675372e0 ompi_coll_base_bcast_intra_generic + 1264

3 0x7f21674dc496 ompi_request_default_wait + 326

2 0x7f2160cce19c opal_progress + 44

1 0x7f215aef3d19 /usr/lib/openmpi/openmpi/mca_btl_vader.so(+0x4d19) [0x7f215aef3d19]

0 0x7f216dfa06a0 /usr/lib/libc.so.6(+0x3d6a0) [0x7f216dfa06a0]

( 162.101s) [pvserver.3 ] :0 FATL| Signal: SIGTERM

Loguru caught a signal: SIGTERM

Stack trace:

30 0x55897856f55e pvserver(+0x255e) [0x55897856f55e]

29 0x7f4114e48152 __libc_start_main + 242

28 0x55897856f4e5 pvserver(+0x24e5) [0x55897856f4e5]

27 0x7f411316abcf vtkMultiProcessController::BroadcastProcessRMIs(int, int) + 239

26 0x7f411316a990 vtkMultiProcessController::ProcessRMI(int, void*, int, int) + 304

25 0x7f411381c4c5 vtkPVSessionCore::ExecuteStreamSatelliteCallback() + 181

24 0x7f411381bef2 vtkPVSessionCore::ExecuteStreamInternal(vtkClientServerStream const&, bool) + 242

23 0x7f411335db0d vtkClientServerInterpreter::ProcessStream(vtkClientServerStream const&) + 29

22 0x7f411335d65e vtkClientServerInterpreter::ProcessOneMessage(vtkClientServerStream const&, int) + 190

21 0x7f411335d545 vtkClientServerInterpreter::ProcessCommandInvoke(vtkClientServerStream const&, int) + 1173

20 0x7f4113da1510 vtkPVRenderViewCommand(vtkClientServerInterpreter*, vtkObjectBase*, char const*, vtkClientServerStream const&, vtkClientServerStream&, void*) + 25248

19 0x7f41115df3b6 vtkPVRenderView::Update() + 134

18 0x7f41115f472c vtkPVView::Update() + 268

17 0x7f41115f2b9f vtkPVView::CallProcessViewRequest(vtkInformationRequestKey*, vtkInformation*, vtkInformationVector*) + 159

16 0x7f41115a9e18 vtkPVGridAxes3DRepresentation::ProcessViewRequest(vtkInformationRequestKey*, vtkInformation*, vtkInformation*) + 40

15 0x7f41115a250f vtkPVDataRepresentation::ProcessViewRequest(vtkInformationRequestKey*, vtkInformation*, vtkInformation*) + 239

14 0x7f41130fe717 vtkStreamingDemandDrivenPipeline::Update(int, vtkInformationVector*) + 279

13 0x7f41130fd381 vtkStreamingDemandDrivenPipeline::ProcessRequest(vtkInformation*, vtkInformationVector**, vtkInformationVector*) + 881

12 0x7f41130baf4f vtkDemandDrivenPipeline::ProcessRequest(vtkInformation*, vtkInformationVector**, vtkInformationVector*) + 1343

11 0x7f41130b4f71 vtkCompositeDataPipeline::ExecuteData(vtkInformation*, vtkInformationVector**, vtkInformationVector*) + 129

10 0x7f41130b8279 vtkDemandDrivenPipeline::ExecuteData(vtkInformation*, vtkInformationVector**, vtkInformationVector*) + 57

9 0x7f41130be160 vtkExecutive::CallAlgorithm(vtkInformation*, int, vtkInformationVector**, vtkInformationVector*) + 80

8 0x7f41115aa342 vtkPVGridAxes3DRepresentation::RequestData(vtkInformation*, vtkInformationVector**, vtkInformationVector*) + 498

7 0x7f410e4810d8 vtkMPICommunicator::AllReduceVoidArray(void const*, void*, long long, int, int) + 488

6 0x7f410e3aa3d9 MPI_Allreduce + 441

5 0x7f410e3f9533 ompi_coll_base_allreduce_intra_recursivedoubling + 1059

4 0x7f410e3f8b98 ompi_coll_base_sendrecv_actual + 200

3 0x7f410e399496 ompi_request_default_wait + 326

2 0x7f4107b8b19c opal_progress + 44

1 0x7f4105ebbd19 /usr/lib/openmpi/openmpi/mca_btl_vader.so(+0x4d19) [0x7f4105ebbd19]

0 0x7f4114e5d6a0 /usr/lib/libc.so.6(+0x3d6a0) [0x7f4114e5d6a0]

( 162.103s) [pvserver.2 ] :0 FATL| Signal: SIGTERM

Loguru caught a signal: SIGTERM

Stack trace:

21 0x56208606655e pvserver(+0x255e) [0x56208606655e]

20 0x7fd96f39c152 __libc_start_main + 242

19 0x5620860664e5 pvserver(+0x24e5) [0x5620860664e5]

18 0x7fd96d6bebcf vtkMultiProcessController::BroadcastProcessRMIs(int, int) + 239

17 0x7fd96d6be990 vtkMultiProcessController::ProcessRMI(int, void*, int, int) + 304

16 0x7fd96dd704c5 vtkPVSessionCore::ExecuteStreamSatelliteCallback() + 181

15 0x7fd96dd6fef2 vtkPVSessionCore::ExecuteStreamInternal(vtkClientServerStream const&, bool) + 242

14 0x7fd96d8b1b0d vtkClientServerInterpreter::ProcessStream(vtkClientServerStream const&) + 29

13 0x7fd96d8b165e vtkClientServerInterpreter::ProcessOneMessage(vtkClientServerStream const&, int) + 190

12 0x7fd96d8b1545 vtkClientServerInterpreter::ProcessCommandInvoke(vtkClientServerStream const&, int) + 1173

11 0x7fd96e2f5510 vtkPVRenderViewCommand(vtkClientServerInterpreter*, vtkObjectBase*, char const*, vtkClientServerStream const&, vtkClientServerStream&, void*) + 25248

10 0x7fd96bb334da vtkPVRenderView::Update() + 426

9 0x7fd96bb478d7 vtkPVView::AllReduce(unsigned long long, unsigned long long&, int, bool) + 679

8 0x7fd9689d3ea0 vtkMPICommunicator::BroadcastVoidArray(void*, long long, int, int) + 176

7 0x7fd96890213b PMPI_Bcast + 283

6 0x7fd9601253ec ompi_coll_tuned_bcast_intra_dec_fixed + 252

5 0x7fd96894877c ompi_coll_base_bcast_intra_binomial + 188

4 0x7fd968948324 ompi_coll_base_bcast_intra_generic + 1332

3 0x7fd9688ed496 ompi_request_default_wait + 326

2 0x7fd9620df19c opal_progress + 44

1 0x7fd96030cc73 /usr/lib/openmpi/openmpi/mca_btl_vader.so(+0x4c73) [0x7fd96030cc73]

0 0x7fd96f3b16a0 /usr/lib/libc.so.6(+0x3d6a0) [0x7fd96f3b16a0]

( 162.103s) [pvserver.1 ] :0 FATL| Signal: SIGTERM
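
In case it helps anyone triaging a similar log, here is a minimal Python sketch that summarizes it per rank. It is not part of ParaView or TTK; the function names `failed_algorithms` and `blocked_mpi_call` are made up for illustration, and the regular expressions assume the line layout seen in the log above (`[pvserver.N ]`, `ERR|`, `FATL|`, and stack frames of the form `index address symbol + offset`). The `SAMPLE` text is a short excerpt copied from that log.

```python
import re
from collections import defaultdict

# "... [pvserver.1 ] vtkExecutive.cxx:752 ERR| ...: Algorithm ttkFTMTree(0x...) returned failure ..."
FAILURE_RE = re.compile(
    r"\[pvserver\.(\d+)\s*\].*?ERR\|.*?Algorithm (\w+)\(0x[0-9a-f]+\) returned failure"
)
# "... [pvserver.3 ] :0 FATL| Signal: SIGTERM" closes each stack trace and names the rank.
FATAL_RE = re.compile(r"\[pvserver\.(\d+)\s*\].*?FATL\|")
# A stack frame whose symbol is an MPI entry point, e.g. "7 0x7f21674f113b PMPI_Bcast + 283".
MPI_FRAME_RE = re.compile(r"^\s*\d+\s+0x[0-9a-f]+\s+(P?MPI_\w+)\s+\+")


def failed_algorithms(log_text):
    """Map rank -> algorithms that reported failure, in order of first appearance."""
    failures = defaultdict(list)
    for line in log_text.splitlines():
        match = FAILURE_RE.search(line)
        if match:
            rank, algorithm = int(match.group(1)), match.group(2)
            if algorithm not in failures[rank]:
                failures[rank].append(algorithm)
    return dict(failures)


def blocked_mpi_call(log_text):
    """Map rank -> first MPI entry point found in that rank's stack trace."""
    blocked = {}
    current_call = None
    for line in log_text.splitlines():
        if line.strip() == "Stack trace:":
            current_call = None  # a new trace starts; forget the previous one
            continue
        frame = MPI_FRAME_RE.match(line)
        if frame and current_call is None:
            current_call = frame.group(1)
            continue
        fatal = FATAL_RE.search(line)
        if fatal:
            # The rank is only known once the trailing FATL line is reached.
            blocked[int(fatal.group(1))] = current_call
            current_call = None
    return blocked


SAMPLE = """\
( 160.037s) [pvserver.1 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x5620886ed3f0): Algorithm ttkFTMTree(0x562089f999e0) returned failure for request: vtkInformation (0x562088a9a200)
( 160.947s) [pvserver.1 ] TiSieve.cpp:134 ERR| TiSieve (0x56208c21d120): Input is missing point data
( 160.947s) [pvserver.1 ] vtkExecutive.cxx:752 ERR| vtkPVCompositeDataPipeline (0x56208c4dca20): Algorithm TiSieve(0x56208c21d120) returned failure for request: vtkInformation (0x56208e248270)
Stack trace:
8 0x7f21675c2ea0 vtkMPICommunicator::BroadcastVoidArray(void*, long long, int, int) + 176
7 0x7f21674f113b PMPI_Bcast + 283
( 162.101s) [pvserver.3 ] :0 FATL| Signal: SIGTERM
Stack trace:
7 0x7f410e4810d8 vtkMPICommunicator::AllReduceVoidArray(void const*, void*, long long, int, int) + 488
6 0x7f410e3aa3d9 MPI_Allreduce + 441
( 162.103s) [pvserver.2 ] :0 FATL| Signal: SIGTERM
"""

if __name__ == "__main__":
    print(failed_algorithms(SAMPLE))
    print(blocked_mpi_call(SAMPLE))
```

Reading the full log this way, all the filter failures are on ranks 1 to 3, and the stack traces show ranks 1 and 3 waiting in `PMPI_Bcast` (via `vtkPVView::AllReduce`) while rank 2 is waiting in `MPI_Allreduce` (via `vtkPVGridAxes3DRepresentation::RequestData`). Ranks sitting in different collectives could be related to the `MPI_ERR_TRUNCATE` on rank 0, but that is only a guess at this point.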