ParaView 5.8.1, installed from the precompiled binaries distributed on the ParaView website, runs without problems on my computer.

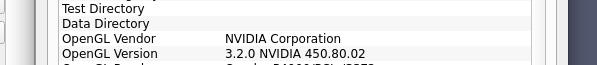

However, at start-up ParaView launches VisRTX, which grabs all GPUs in the computer as graphics devices. The screen output is:

VisRTX 0.1.6, using devices:

0: Quadro GP100 (Total: 17.1 GB, Available: 3.8 GB)

1: Quadro GP100 (Total: 17.1 GB, Available: 13.0 GB)

2: Quadro P600 (Total: 2.1 GB, Available: 2.1 GB)

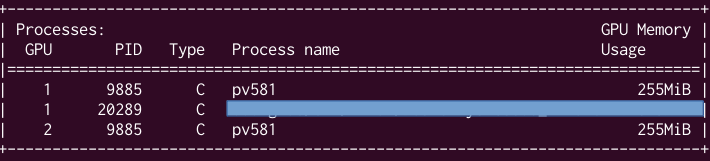

Consistently, the GPU management interface nvidia-smi shows that the ParaView processes have taken memory on devices 0 and 1. (Device 2 is used as the graphics card for local screen rendering, I presume; I am connected to the computer in question over a network.)
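For reference, the same check can be done in a script-friendly form. This is only a sketch: `gpu_report` is a hypothetical helper name of mine, and it assumes `nvidia-smi` is on the PATH. As far as I understand, the second query lists compute (CUDA) processes only, so a process that holds just a graphics context would not appear there.

```shell
# Hypothetical helper: print per-GPU memory use and the compute processes
# currently holding GPU memory, in CSV form.
gpu_report() {
    if ! command -v nvidia-smi >/dev/null 2>&1; then
        echo "nvidia-smi not found on PATH" >&2
        return 127
    fi
    # One row per GPU: index, name, memory used and total.
    nvidia-smi --query-gpu=index,name,memory.used,memory.total --format=csv
    # One row per compute (CUDA) process: PID, executable, memory used.
    nvidia-smi --query-compute-apps=pid,process_name,used_memory --format=csv
}

# Run the report; do not abort the script if no GPU tooling is available.
gpu_report || true
```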

For task-management reasons, I want to keep one GPU exclusively for computing (device 1 in the example above). I have not found an option in the ParaView command line (paraview --help) that tells ParaView which GPU to use.

How can I launch ParaView and tell it to use only device 0?

Naive attempt: taking inspiration from https://github.com/NVIDIA/VisRTX#multi-gpu, if I launch ParaView with the augmented command

CUDA_VISIBLE_DEVICES='0' [path to paraview binaries]/paraview

the response is

VisRTX 0.1.6, using devices:

0: Quadro GP100 (Total: 17.1 GB, Available: 3.2 GB)

as wished. However, unlike in the first launch, nvidia-smi shows no ParaView process and no ParaView memory share on the GPU. So I wonder whether fixing the one has spoiled the other: alas, I can see the ParaView process working on the CPU.
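To make the attempt reproducible, here is a minimal sketch of the launch as a small shell function. `run_on_gpus` is a hypothetical name I made up; the only real mechanism involved is the `CUDA_VISIBLE_DEVICES` environment variable, which is set for the launched command alone. The stand-in `sh -c` command just prints what the child process sees, and the ParaView line keeps the path placeholder from above.

```shell
# Hypothetical helper: run one command with only the listed CUDA devices visible.
# Usage: run_on_gpus <comma-separated device indices> <command> [args...]
# The function body is a subshell, so nothing leaks into the calling shell.
run_on_gpus() (
    devices=$1
    shift
    # env sets the variable for the launched command only.
    exec env CUDA_VISIBLE_DEVICES="$devices" "$@"
)

# Stand-in command showing what the child process sees.
run_on_gpus 0 sh -c 'echo "CUDA_VISIBLE_DEVICES=$CUDA_VISIBLE_DEVICES"'
# prints: CUDA_VISIBLE_DEVICES=0

# The actual launch would then be (path left as a placeholder):
# run_on_gpus 0 [path to paraview binaries]/paraview
```

Note that this only restricts what CUDA sees inside that one process; whether it also affects which device does the rendering is exactly what I am unsure about.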

Note: this post is the opposite of "Select which GPU to use when starting ParaView", in which the poster wanted to enable a device. I want to disable one.