Hi all,

I am using Paraview 5.11.0 and MPI 4.0.3.

I have noticed a strange behavior on the distribution of regular grids.

In my example, i use the pvserver in mpi for 16 processes:

mpirun -n 16 pvserver

paraview

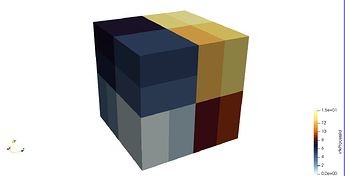

I then create a data set using the Wavelet filter. The bounds of the wavelet are: [0, 255, 0, 255, 0, 255]. This creates a cube. In the image below, I have shown the vtkProcessId field for points. What can be seen is that the distribution is not the same for all processes.

For example, for the two dark blue processes in the upper left corner, in the front, the division between the two processes is done according to the y direction, whereas for the two yellow blocks in the upper right corner, the division is done following the z direction.

Is this a bug, or is this normal? My understanding of the distribution algorithm is that the division is done following the direction for which the block has the biggest dimension. In this case, it is a cube therefore when going from 8 to 16 processes, all dimensions are of the same size (all are cubes for 8 processes). Is there a priority put in place so that when there is an equality in dimensions, some direction is chosen rather than another one?

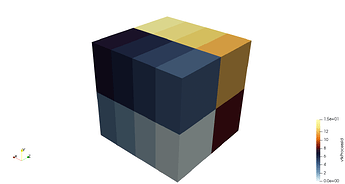

Another thing that I noticed, when using the ResampleToImage filter on the previous data set, to create a 256^3 cube (so a cube of exactly the same dimensions as before), Paraview produces the following output:

This is what I would like to always have for my application.

Is this a bug or is this normal? Is there a way to force the second distribution to always happen?

Thanks for any help.