I am running ParaView 5.10.1 (EGL build) on a remote server that has multiple GPUs.

Are there any ParaView filters I can test with to verify that my remote pvserver is indeed utilising all the GPUs?

Cheers

Hi @Nicholas_Yue,

A single pvserver process can use only one GPU; which GPU it uses is usually selected with an EGL environment variable.

To check which GPU is in use, open Help > About and look at the remote (server) tab.

To use multiple GPUs, you need a distributed pvserver running under MPI: each MPI process can be configured with a different EGL environment variable so that it uses a different GPU.

Hth,

Q1: Can you share an example mpirun command that does what you suggested, as a starting point for me to try out on my setup?

Q2: With multiple pvserver processes running on the remote/headless server, does each of them require its own port other than the standard 11111? Can I connect to the remote/headless server using the single port 11111?

Cheers

Q1

Probably something like this:

```shell
mpirun -bynode \
  -np 10 pvserver --egl-device-index=0 : \
  -np 10 pvserver --egl-device-index=1
```

Not tested, though. Check your own MPI implementation and its options.

Q2

Nothing special is needed if you run only pvserver: it uses the standard port 11111 to communicate with the client, and only the root MPI process talks to the client. The server processes communicate with each other internally over MPI, which you do not need to care about.

It appears that pvserver does not support the `--egl-device-index` option:

```
# mpiexec pvserver --egl-device-index=0 : pvserver --egl-device-index=1
The following argument was not expected: --egl-device-index=0
Usage: /opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver-real [OPTIONS]

[General Guidelines]
Values for options can be specified either with a space (' ') or an equal-to sign ('='). Thus, '--option=value' and '--option value' are equivalent.
Multi-valued options can be specified by providing the option multiple times or separating the values by a comma (','). Thus, '--moption=foo,bar' and '--moption=foo --moption=bar' are equivalent.
Short-named options with more than one character name are no longer supported, and should simply be specified as long-named option by adding an addition '-'. Thus, use '--dr' instead of '-dr'.

Try `--help` for more more information.
```

This option may have changed; maybe @ben.boeckel @utkarsh.ayachit @chuckatkins @martink know more.

I figured it out: it needs `--displays` before the index specification.

```shell
mpiexec pvserver --displays --egl-device-index=0 : pvserver --displays --egl-device-index=1
```

I can connect on the default port 11111.

Next, I will run nvtop to monitor GPU usage and verify that both GPUs are actually being utilized.
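If nvtop is not at hand, the plain `nvidia-smi` process table (like the ones pasted further down in this thread) can also be filtered to list which GPU indices host a pvserver process. This is a minimal sketch, assuming the default `nvidia-smi` table layout, where the GPU index is the second whitespace-separated field of each process row; the function name `pvserver_gpus` is hypothetical.

```shell
#!/bin/sh
# pvserver_gpus (hypothetical name): read nvidia-smi's default output on stdin
# and print each GPU index that hosts at least one pvserver process, once.
# Assumption: process rows look like
#   |    0  N/A  N/A      3198      G   ...-x86_64/bin/pvserver-real    15995MiB |
# so the GPU index is field 2 when splitting on whitespace. GPU summary rows
# also start with "| <index>" but never contain "pvserver", so they are skipped.
pvserver_gpus() {
  awk '$1 == "|" && $2 ~ /^[0-9]+$/ && /pvserver/ { print $2 }' | sort -un
}
```

Running `nvidia-smi | pvserver_gpus` should print both `0` and `1` once each rank renders on its own GPU; if only `0` comes back, all pvserver processes are sharing device 0.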

Thank you @mwestphal

Cheers

I’m glad you figured it out.

This surely should be documented somewhere on docs.paraview.org

It still appears to be using only one GPU, though. I am monitoring with nvtop, and both pvserver processes appear to be using device 0.

Somebody with a multi GPU setup will need to go ahead and debug.

For what it is worth (not everybody will have NVIDIA IndeX enabled): I can start a parallel job with 4 MPI tasks per node (I have 4 NVIDIA A100s per node), and IndeX correctly finds the 4 GPUs:

```
nvindex: 0.1 CUDA rend info : Found 4 CUDA devices.
nvindex: 0.1 CLUSTR net info : Networking is switched off.
nvindex: 1.0 INDEX main info : NVIDIA driver version: NVIDIA UNIX x86_64 Kernel Module 510.47.03 Mon Jan 24 22:58:54 UTC 2022
nvindex: 1.0 INDEX main info : CUDA runtime version: 10.2 / CUDA driver version: 11.6
nvindex: 1.0 INDEX main info : CUDA device 0: NVIDIA PG506-232, Compute 8.0, 95 GB, ECC enabled.
nvindex: 1.0 INDEX main info : CUDA device 1: NVIDIA PG506-232, Compute 8.0, 95 GB, ECC enabled.
nvindex: 1.0 INDEX main info : CUDA device 2: NVIDIA PG506-232, Compute 8.0, 95 GB, ECC enabled.
nvindex: 1.0 INDEX main info : CUDA device 3: NVIDIA PG506-232, Compute 8.0, 95 GB, ECC enabled.
```

I use SLURM’s `#SBATCH --gpu-bind=single:1` option with ParaView v5.11-RC1.

I do need more testing; the dust hasn’t settled.

Accessing multiple GPUs via NVIDIA IndeX requires a license from NVIDIA.

So it appears that the second GPU can at least be discovered; hopefully the default volume rendering in pvserver (without IndeX) can pick it up somehow.

```
nvindex: 0.0 INDEX main info : This NVIDIA IndeX license key will expire on 2023-08-31.
nvindex: 0.0 INDEX main info : This free version of NVIDIA IndeX enables the compute power of a single GPU for scientific visualization.
nvindex: 0.0 INDEX main info : Starting the DiCE library (DiCE 2021, build 348900.100.964, 26 Aug 2021, linux-x86-64) ...
nvindex: 0.1 CUDA rend info : Found 2 CUDA devices.
nvindex: 0.1 CLUSTR net info : Networking is switched off.
nvindex: 1.0 INDEX main info : The current NVIDIA IndeX license is restricted to utilize only a single GPU / CUDA device (found 2 CUDA devices).
```

Testing ParaView 5.11-RC1 on a multi-GPU node, I have found it much easier to set the VTK_DEFAULT_EGL_DEVICE_INDEX environment variable based on my local SLURM_LOCALID. Using `--displays` was over-scheduling the GPUs. Setting the environment variable was easier, and with one MPI task per GPU (i.e. 4 in my case), ParaView can now use all GPUs:

```
+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0  N/A  N/A     105521      G   pvserver                         8937MiB |
|    1  N/A  N/A     105520      G   pvserver                         8937MiB |
|    2  N/A  N/A     105519      G   pvserver                         8937MiB |
|    3  N/A  N/A     105518      G   pvserver                         8855MiB |
+-----------------------------------------------------------------------------+
```

Thanks to the NERSC documentation page "Process and Thread Affinity", which I had to adapt somewhat for our local SLURM settings.
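A minimal wrapper sketch of the approach described above: each MPI rank sets VTK_DEFAULT_EGL_DEVICE_INDEX from its node-local rank and then execs pvserver. The script name `gpu-bind.sh`, the function `egl_device_for_rank` and the optional `NGPUS` variable are hypothetical. SLURM_LOCALID comes from the post above; OMPI_COMM_WORLD_LOCAL_RANK (Open MPI) and MPI_LOCALRANKID (MPICH's Hydra launcher, which as far as I know is the `mpiexec` shipped with the ParaView binaries) are fallbacks I am assuming, so check your launcher's documentation.

```shell
#!/bin/sh
# gpu-bind.sh (hypothetical name): run a command with VTK_DEFAULT_EGL_DEVICE_INDEX
# chosen from the node-local MPI rank, so that each rank renders on its own GPU.
# Usage: gpu-bind.sh pvserver [pvserver options...]

# egl_device_for_rank LOCAL_RANK NGPUS: round-robin a node-local rank onto a GPU.
# NGPUS must be a positive integer.
egl_device_for_rank() {
  echo $(( $1 % $2 ))
}

# Node-local rank from SLURM (srun), Open MPI, or MPICH/Hydra, in that order.
# Assumption: your launcher sets one of these; otherwise rank 0 is used.
local_rank=${SLURM_LOCALID:-${OMPI_COMM_WORLD_LOCAL_RANK:-${MPI_LOCALRANKID:-0}}}

# NGPUS (hypothetical, optional): GPUs per node. If unset, the local rank is
# used directly, which matches running exactly one MPI task per GPU.
if [ -n "${NGPUS:-}" ]; then
  VTK_DEFAULT_EGL_DEVICE_INDEX=$(egl_device_for_rank "$local_rank" "$NGPUS")
else
  VTK_DEFAULT_EGL_DEVICE_INDEX=$local_rank
fi
export VTK_DEFAULT_EGL_DEVICE_INDEX

if [ "$#" -gt 0 ]; then
  # Replace this shell with the real command (e.g. pvserver) so signals reach it.
  exec "$@"
fi
# Without a command, only report the device that would be used (dry run).
echo "VTK_DEFAULT_EGL_DEVICE_INDEX=$VTK_DEFAULT_EGL_DEVICE_INDEX"
```

After `chmod +x gpu-bind.sh`, this would be launched as e.g. `srun --ntasks-per-node=4 ./gpu-bind.sh pvserver` under SLURM, or `mpiexec -n 2 ./gpu-bind.sh /opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver` on a single box without SLURM (assuming that mpiexec sets MPI_LOCALRANKID). Keeping the rank-to-GPU mapping in one wrapper means the launch command no longer changes with the GPU count.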

The single box I have does not have SLURM, and I am hoping not to have to install and manage SLURM for single runs.

Here is my attempt at running mpiexec with the information you shared:

```shell
/opt/ParaView-5.11.0-RC1-egl-MPI-Linux-Python3.9-x86_64/bin/mpiexec -host ubuntu,ubuntu \
  -n 1 -envlist VTK_DEFAULT_EGL_DEVICE_INDEX=0 /opt/ParaView-5.11.0-RC1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver : \
  -n 1 -envlist VTK_DEFAULT_EGL_DEVICE_INDEX=1 /opt/ParaView-5.11.0-RC1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver
```

I am still seeing only GPU 0 being utilized:

```
+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0  N/A  N/A       3929      G   ...-x86_64/bin/pvserver-real        6MiB |
|    0  N/A  N/A       3930      G   ...-x86_64/bin/pvserver-real        6MiB |
+-----------------------------------------------------------------------------+
```

Are there additional flags I need to pass to mpiexec to get one GPU per task?

Cheers

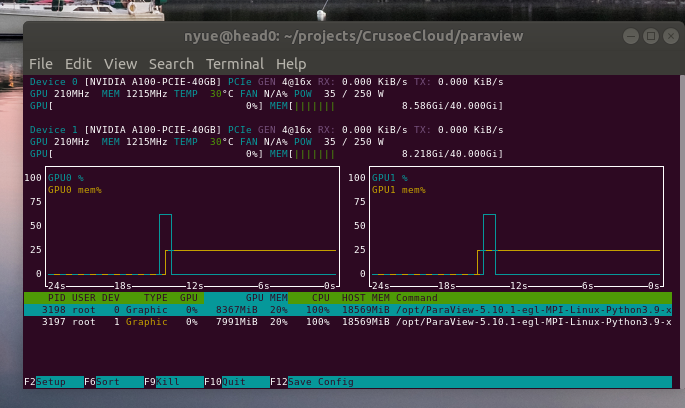

My bad, I was not supplying the environment variable correctly (I used `-envlist` instead of `-env`). It is working via mpiexec now:

```shell
/opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/mpiexec \
  -env VTK_DEFAULT_EGL_DEVICE_INDEX=0 /opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver : \
  -env VTK_DEFAULT_EGL_DEVICE_INDEX=1 /opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver
```

```
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 510.73.05    Driver Version: 510.73.05    CUDA Version: 11.6     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA A100-PCI...  Off  | 00000000:04:00.0 Off |                    0 |
| N/A   26C    P0    34W / 250W |  15995MiB / 40960MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+
|   1  NVIDIA A100-PCI...  Off  | 00000000:05:00.0 Off |                    0 |
| N/A   26C    P0    34W / 250W |  23557MiB / 40960MiB |      0%      Default |
|                               |                      |             Disabled |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0  N/A  N/A       3198      G   ...-x86_64/bin/pvserver-real    15995MiB |
|    1  N/A  N/A       3197      G   ...-x86_64/bin/pvserver-real    23557MiB |
+-----------------------------------------------------------------------------+
```

Thank you.
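To generalise the working command above to N GPUs on one box without typing each `:` group by hand, the sketch below builds the same MPMD argument list in a loop, one `-env VTK_DEFAULT_EGL_DEVICE_INDEX=i` group per GPU. It assumes the MPICH/Hydra `mpiexec` bundled with ParaView and reuses the `-env NAME=VALUE` form that worked above; the function name `launch_pvservers` is hypothetical.

```shell
#!/bin/sh
# launch_pvservers NGPUS PVSERVER [MPIEXEC]  (hypothetical name)
# For NGPUS=2 this runs:
#   MPIEXEC -n 1 -env VTK_DEFAULT_EGL_DEVICE_INDEX=0 PVSERVER : \
#           -n 1 -env VTK_DEFAULT_EGL_DEVICE_INDEX=1 PVSERVER
# "-n 1" only makes the one-rank-per-group explicit.
# MPIEXEC defaults to "mpiexec" found on the PATH.
launch_pvservers() {
  ngpus=$1
  pvserver=$2
  mpiexec_cmd=${3:-mpiexec}
  set --                        # reuse the positional parameters as an argument list
  i=0
  while [ "$i" -lt "$ngpus" ]; do
    if [ "$i" -gt 0 ]; then
      set -- "$@" :             # ':' separates the MPMD groups
    fi
    set -- "$@" -n 1 -env "VTK_DEFAULT_EGL_DEVICE_INDEX=$i" "$pvserver"
    i=$((i + 1))
  done
  "$mpiexec_cmd" "$@"
}
```

On the two-GPU box from this thread, the call would be `launch_pvservers 2 /opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/pvserver /opt/ParaView-5.10.1-egl-MPI-Linux-Python3.9-x86_64/bin/mpiexec`. Building the list with `set --` rather than a single string keeps paths containing spaces intact.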