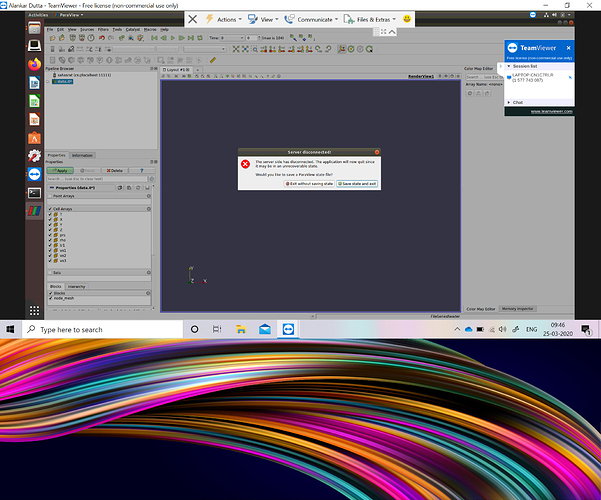

I am trying to open HDF5 files of about 14 GB each in ParaView, but whenever I try to load the data, ParaView closes with the error message ‘server side has disconnected’. I am using SahasraT and running ParaView on 960 processors.

With smaller datasets everything works fine. Please help me resolve this issue.

There are two possibilities here.

- This is indeed a large-file issue, and you are running out of memory on the server side.

- There is an issue with the file itself, not with its size.

Monitor the memory usage on the server side and you will know.
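Before (or alongside) monitoring, a back-of-envelope estimate can show whether the first possibility is even plausible. This is a minimal sketch, not a description of how ParaView's readers actually distribute data: it brackets two assumed cases, data split evenly over all ranks versus every rank holding the whole dataset. The helper names, the 2x overhead factor, and the layout of 960 ranks as 40 nodes × 24 ranks with 128 GiB per node are all hypothetical placeholders.

```python
# Back-of-envelope memory check for a pvserver job.
# Every number used with these helpers is a placeholder; substitute your own.

GIB = 1024 ** 3


def per_node_demand(data_bytes: float, nodes: int, ranks_per_node: int,
                    overhead: float = 2.0, replicated: bool = False) -> float:
    """Estimate the peak bytes needed on one node.

    data_bytes  -- size of the arrays being loaded (roughly the HDF5 file size)
    overhead    -- guessed multiplier for extra copies made while reading/filtering
    replicated  -- True: every rank holds the whole dataset (worst case)
                   False: the data is split evenly over all ranks (best case)
    """
    total_ranks = nodes * ranks_per_node
    per_rank = data_bytes if replicated else data_bytes / total_ranks
    return per_rank * ranks_per_node * overhead


def fits(data_bytes: float, nodes: int, ranks_per_node: int,
         node_mem_bytes: float, **kwargs) -> bool:
    """True if the estimated per-node demand stays within node memory."""
    return per_node_demand(data_bytes, nodes, ranks_per_node, **kwargs) <= node_mem_bytes


if __name__ == "__main__":
    # Hypothetical layout: 960 ranks as 40 nodes x 24 ranks, 128 GiB per node.
    for replicated in (False, True):
        need = per_node_demand(14e9, 40, 24, replicated=replicated)
        print(f"replicated={replicated}: ~{need / GIB:.1f} GiB per node")
```

If even the evenly split case does not fit, the data is simply too large for the allocation; if only the replicated case fails, watching the actual memory usage tells you which behaviour you are seeing.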

The file is okay, since my Python scripts analyze these files without problems.

Can you suggest how I can load such large data files in ParaView?

Please provide the error message and check the memory usage.

You are running ParaView in client-server mode.

How do you run the server? Do you have access to the terminal where the server is running?

I have ParaView compiled on the server, which is a Cray XC40 system. I'm attaching the job script that I submit to run the server. Once the job starts and the server is set up, I connect to it from a client on my machine through standard SSH port forwarding. I have no issues loading a lower-resolution but otherwise identical dataset.

job_pv (694 Bytes)

Can you run the client from a terminal? You should be able to see whether there was an error on the server before it crashed.

Has the issue described in the following link been resolved? My problem is exactly the same:

https://www.paraview.org/pipermail/paraview/2015-February/033209.html

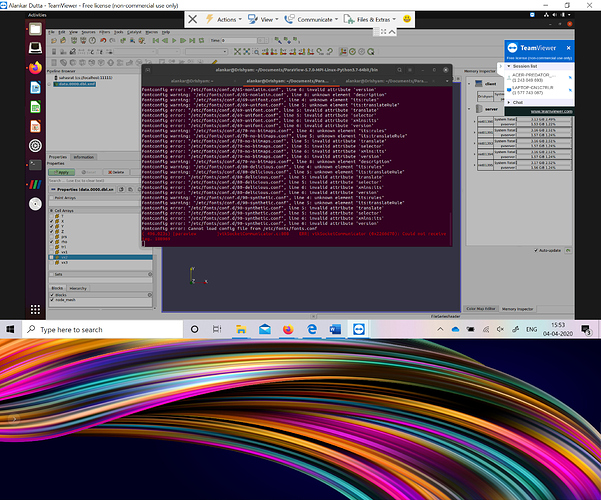

As you suggested, I ran the ParaView client from a terminal and got the following error message:

( 496.023s) [paraview ]vtkSocketCommunicator.c:808 ERR| vtkSocketCommunicator (0x2266d70): Could not receive tag. 188969

I am also attaching the screenshots.

Sadly, it is not very informative.

Do you have access to the terminal running the server?

Yes, I have access to the server terminal. I submit a pvserver job on the Cray XC40 using the job script I shared. Then, from the client side, I run “ssh phyvijitk@sahasrat.serc.iisc.ernet.in -L 11111:nid01390:11111” to forward the server node's port to the client (I get “nid01390:11111” from the “Accepting connection(s)” line that pvserver prints). Finally, I connect to pvserver at localhost:11111 from inside the ParaView GUI on the client machine.
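Reading the node name off the pvserver output and typing the tunnel command by hand can be scripted. A minimal sketch, assuming the pvserver output is available as text (for example in the job's output file, whose name depends on the job script and is not shown here); `find_server_endpoint` and `ssh_tunnel_command` are hypothetical helper names, and the regular expression only matches the “Accepting connection(s): node:port” line format shown in this thread.

```python
import re

# Matches the line pvserver prints once it is listening, e.g.
# "Accepting connection(s): nid00633:11111"
_ACCEPT_RE = re.compile(r"Accepting connection\(s\):\s*([^\s:]+):(\d+)")


def find_server_endpoint(log_text: str):
    """Return (node, port) parsed from pvserver output, or None if not found yet."""
    match = _ACCEPT_RE.search(log_text)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def ssh_tunnel_command(login: str, node: str, port: int, local_port: int = 11111):
    """Build the ssh port-forwarding command (as used above) as an argument list."""
    return ["ssh", login, "-L", f"{local_port}:{node}:{port}"]
```

The returned list can be printed with `" ".join(cmd)` and pasted into a terminal, or passed directly to `subprocess.run(cmd)`; after the tunnel is up, the client still connects to localhost on the local port.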

Anything more from this terminal before the crash?

Sorry for the delayed response; the job was in the queue for a long time. Here is what the connection info file showed when I tried to load the .xmf file (with a companion HDF5 file of about 14.8 GB):

Waiting for client…

Connection URL: cs://nid00633:11111

Accepting connection(s): nid00633:11111

Client connected.

Application 64641 exit signals: Killed

Application 64641 resources: utime ~224s, stime ~305s, Rss ~44364, inblocks ~4640160, outblocks ~0

Well, you will have to do that again, but this time monitor the server's memory usage and check whether it exceeds what you are allowed to use.

I went through some online articles but couldn't find anything useful for monitoring memory on the Cray system where I submit my ParaView jobs. Can you suggest anything in this regard?

I know nothing about Cray systems. Is there no htop?
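If htop is not available, a small script can sample the node's memory instead. A minimal sketch, assuming the compute node running pvserver exposes the standard Linux `/proc/meminfo` with a `MemAvailable` field, and that you can start this script on that same node alongside pvserver (how to do that depends on the job launcher and is not covered here); the helper names are hypothetical.

```python
import time


def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field: first number on the line}.

    Most fields are in kB; a few (e.g. HugePages_Total) are plain counts.
    """
    info = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields:
            info[key.strip()] = int(fields[0])
    return info


def used_fraction(info: dict) -> float:
    """Fraction of node memory in use, based on MemTotal and MemAvailable."""
    return 1.0 - info["MemAvailable"] / info["MemTotal"]


def watch(interval_s: float = 5.0, path: str = "/proc/meminfo") -> None:
    """Print memory usage every interval_s seconds until interrupted."""
    while True:
        with open(path) as f:
            info = parse_meminfo(f.read())
        print(f"{time.strftime('%H:%M:%S')} used={used_fraction(info):.1%} "
              f"available={info['MemAvailable'] / 1024**2:.1f} GiB", flush=True)
        time.sleep(interval_s)
```

Calling `watch()` while loading the file shows whether usage climbs toward 100% just before the server is killed.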

I’m having a similar issue where ParaView crashes when trying to open a 309 GB HDF5 file, even though I have a node with 2.1 TB of memory. Has this issue been resolved, or has someone found a workaround?

What I do is load one HDF5 file at a time in a batch script without the GUI, convert it to a .pvd file, unload it, and then load the next one. After that, the .pvd files load for visualization without crashing.
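The batch conversion described above could look roughly like the following pvpython script. This is a minimal sketch, not the poster's actual script: it assumes the HDF5 data is opened through its .xmf file, that `OpenDataFile` picks a suitable reader for that extension, and that your ParaView build chooses a .pvd writer from the output extension in `SaveData`. The script name `convert_to_pvd.py` and the helper names are hypothetical. If the files contain several time steps, check which ones the writer saves in your ParaView version.

```python
"""Convert XDMF/HDF5 datasets to .pvd one at a time, without the GUI.

Hypothetical usage with pvpython:
    pvpython convert_to_pvd.py run1.xmf run2.xmf
"""
import os
import sys


def output_name(input_path: str, out_dir: str = ".") -> str:
    """Map data/run1.xmf -> <out_dir>/run1.pvd (the naming scheme is arbitrary)."""
    stem = os.path.splitext(os.path.basename(input_path))[0]
    return os.path.join(out_dir, stem + ".pvd")


def convert_all(input_paths, out_dir: str = ".") -> None:
    # Imported here so output_name() can be used without ParaView installed.
    from paraview.simple import Delete, OpenDataFile, SaveData

    for path in input_paths:
        reader = OpenDataFile(path)  # ParaView picks a reader from the extension
        target = output_name(path, out_dir)
        SaveData(target, proxy=reader)  # reads the data and writes the .pvd
        Delete(reader)  # unload this source before loading the next file
        del reader
        print(f"{path} -> {target}", flush=True)


if __name__ == "__main__" and len(sys.argv) > 1:
    convert_all(sys.argv[1:])
```

Deleting the reader inside one session does not guarantee that all memory is returned to the system; for stricter isolation, the job script can call the script once per file so each file's memory is released when its process exits.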