Hi all!

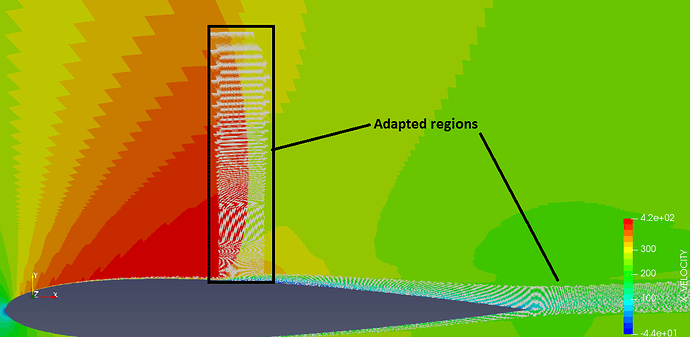

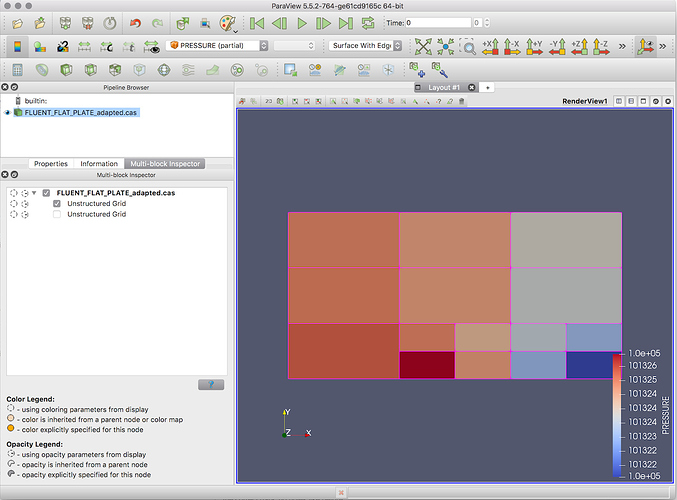

I am trying to load a “.cas” file from Fluent. This works fine for non-adapted meshes. However, when I try to load an adapted mesh I have the problem shown in the attached image.

As you can see, some data is missing, precisely in the region of the grid adaption.

Moreover, the variables list shows all the usual parameters with a “(partial)” label. What does this mean?

I assumed the file is treated as a multiblock dataset, but after applying the MergeBlocks filter, no variable is available.

Any suggestions?

PS1: I really need to use the “.cas” file, so using CGNS or another format is not an option.

PS2: It happens on PV 5.4.0 and 5.5.0.

Thanks in advance!

Miguel

Is there an example file that you can share to reproduce the issue? That will make it easier to figure out what’s going on in those regions.

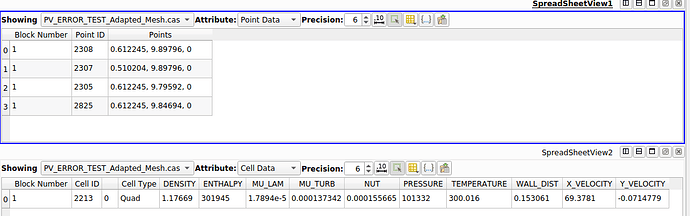

For a multiblock dataset (or any other composite dataset), variables not present on all the blocks are called “partial” arrays.
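To make the definition concrete, here is a minimal pure-Python sketch of how array names could be sorted into "full" and "partial". It does not use ParaView or VTK; the `classify_arrays` helper, the block names, and the array names are all hypothetical and are not taken from the attached files.

```python
def classify_arrays(blocks):
    """Split array names into those present on every block and those on only some.

    `blocks` maps a (hypothetical) block name to the list of array names it carries.
    """
    if not blocks:
        return [], []
    name_sets = [set(names) for names in blocks.values()]
    all_names = set().union(*name_sets)
    common = set.intersection(*name_sets)
    # Arrays missing from at least one block are the "partial" ones.
    return sorted(common), sorted(all_names - common)


# Hypothetical block contents, for illustration only.
blocks = {
    "zone_a": ["PRESSURE", "X_VELOCITY"],
    "zone_b": ["PRESSURE"],
}
full, partial = classify_arrays(blocks)
print("full:", full)
print("partial:", partial)
```

In this toy case `X_VELOCITY` would be the array shown with the “(partial)” label, because `zone_b` does not carry it.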

Hello Mr. Utkarsh,

I have attached a simple example of a Fluent case with and without grid adaption just to show the issue.

The grid without adaption is read correctly in PV 5.4.0, but the adapted one has some missing elements in the adapted region (walls).

I also added the same adapted case in CGNS format, which PV reads correctly, but unfortunately I can’t use it (because I have to post-process it using some plugins that accept “.cas” files only).

Thanks in advance!

Miguel

PV_ERROR_TEST_Adapted_Mesh.cgns (2.2 MB)

PV_ERROR_TEST_Adapted_Mesh.cas (448.8 KB)

PV_ERROR_TEST_Adapted_Mesh.dat (841.6 KB)

PV_ERROR_TEST.cas (298.6 KB)

PV_ERROR_TEST.dat (491.3 KB)

Miguel, let me caveat this by saying that I don’t know much about the FLUENT file format itself, but looking at the raw code and the result, here’s what I am seeing.

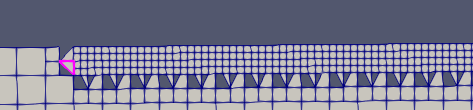

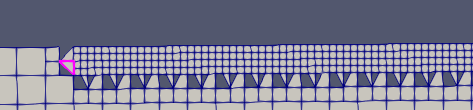

There are cells in zone 10 which look as follows:

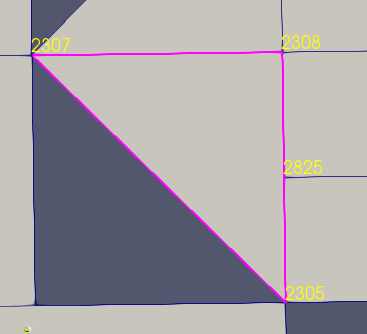

If I inspect the “triangle-like” cell closely, I see the following.

If I label using the point ids for the selected cell, I see the following:

Now the question is: is the cell incorrectly described in the file itself, or is the reader misinterpreting it? Any insight you (or anyone more familiar with the format) may have would be great.

Hello Mr. Utkarsh,

Thank you very much for your help. Unfortunately, I am not familiar with the details of the “.cas” file format. However, I suppose the file is OK, because I managed to read it in several Fluent versions and it works fine. It seems to be an issue with the PV reader.

Thanks again.

Miguel

Thanks for checking. Any chance you can generate a small dataset, with maybe 10-ish cells/points, that still reproduces the issue? I can then easily step through the code to see what could be wrong.

Hello Mr. Utkarsh,

I have attached a Fluent case of a flat plate with 9 cells (no adaption) and 15 cells (adapted). They still reproduce the issue.

I hope this will be useful. Thanks in advance!

Miguel

FLUENT_FLAT_PLATE_adapted.dat (58.4 KB)

FLUENT_FLAT_PLATE_no_adaption.dat (34.6 KB)

FLUENT_FLAT_PLATE_no_adaption.cas (173.1 KB)

FLUENT_FLAT_PLATE_adapted.cas (174.3 KB)

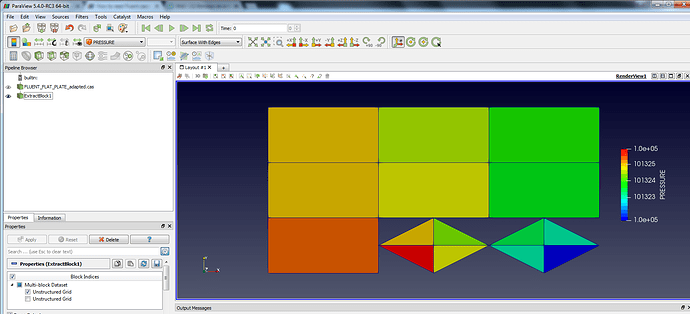

Miguel, thanks for the sample datasets. This time, here’s what I am seeing: the first block in the dataset looks like the correct data, with no holes like before.

If you apply the Extract Block filter to extract just the first block, or use the Multiblock Inspector, you’ll see no weirdness. I’ll try to figure out what makes the reader split the dataset into two blocks with the cells it picks.

Good morning,

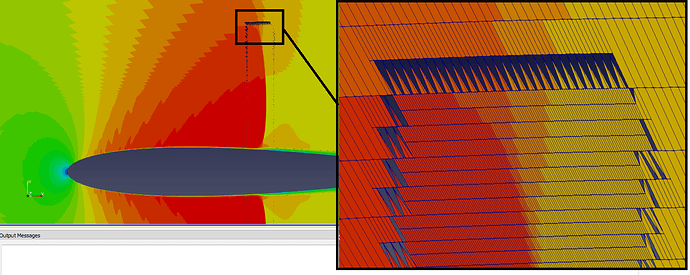

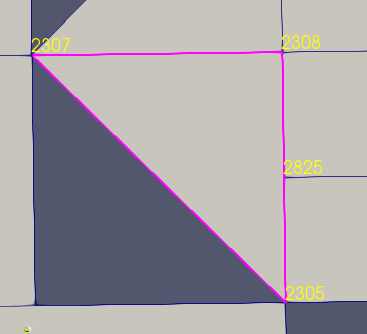

I tried the “extract block” procedure on PV 5.4.0, and the error still happens even with this simple dataset (see the first picture). On PV 5.5.0 it works perfectly fine, as you showed previously. However, for more complex datasets like the airfoil, the extract block approach on PV 5.5.0 seems to recognize the cells in both blocks, but it fails to recognize the cells at the interface between the regions (see the second picture). Since I must integrate data over the entire domain, this may introduce a small accuracy error, but it is still far better than the original problem.
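As a rough way to gauge how much the missing interface cells could affect an integrated quantity, the following sketch compares a cell-wise sum over all cells with the same sum over only the cells that were loaded. The `integrate` helper and the numbers are hypothetical placeholders, not values from the airfoil case.

```python
def integrate(cells):
    """Approximate a domain integral as the sum of value * volume per cell."""
    return sum(value * volume for value, volume in cells)


# Hypothetical (value, volume) pairs; the last cell stands in for an
# interface cell that the reader failed to load.
all_cells = [(2.0, 1.0), (2.0, 1.0), (4.0, 0.5), (4.0, 0.5)]
loaded_cells = all_cells[:3]

total = integrate(all_cells)
loaded = integrate(loaded_cells)
# Fraction of the full integral lost because of the missing cell.
relative_error = (total - loaded) / total
print("relative error:", relative_error)
```

The error scales with the share of the integral carried by the missing cells, so it should stay small as long as only a thin layer of interface cells is dropped.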

Thanks again.

Miguel