I am very new to ParaView. We recently started using a small CFD simulation tool and were told to use ParaView for viewing the results, which works fine. But it always takes ages to render all the calculated time steps into pictures to later process them to a video for further engineering in the team. Is there a way I can get ParaView to make use of my many cores instead of just one? Or maybe even use my GeForce GTX 1060, which should be quite quick? I tried searching for instructions but failed because of my very rudimentary knowledge of ParaView and the way it works. If someone could give me some helpful instructions or a link to a text written for non-experts, I would be very thankful.

BR

Paul

Is there a way I can get ParaView to make use of my many cores instead of just one?

ParaView is multithreaded and uses all the cores of your machine when it can, which is not always the case.

That being said, you can also work with a parallel server, see here:

https://www.paraview.org/Wiki/Setting_up_a_ParaView_Server
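As a rough sketch of what that setup can look like on a single workstation: the server is typically started through MPI with several ranks, and the ParaView GUI then connects to it (File > Connect). The helper below only assembles the command line and does not launch anything. The rank count and the helper name `pvserver_command` are illustrative assumptions, 11111 is ParaView's default server port, and the `--server-port` spelling should be checked against `pvserver --help` for the installed version.

```python
def pvserver_command(ranks=4, port=11111, mpiexec="mpiexec", pvserver="pvserver"):
    """Build the command that starts `ranks` pvserver processes under MPI.

    The executable names are assumptions; on Windows they may need full paths.
    """
    if ranks < 1:
        raise ValueError("ranks must be at least 1")
    return [mpiexec, "-np", str(ranks), pvserver, f"--server-port={port}"]


# Print the command for a 4-rank server on the default port.
print(" ".join(pvserver_command(ranks=4)))
```

Once the server is running, the GUI connects to `localhost` on the same port and the data is opened through the server-side file dialog.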

Or maybe even use my GeForce GTX 1060, which should be quite quick?

ParaView uses your GPU for rendering and, in some cases, for computation, depending on your configuration.

But it always takes ages to render all the calculated time steps into pictures to later process them to a video for further engineering in the team.

To get proper help, you will need to be more specific about what is taking ages and how you installed or built ParaView.

Thank you for that quick reply.

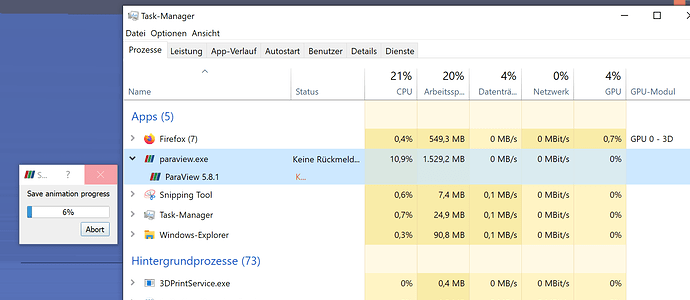

So I just downloaded and installed [ParaView-5.8.1-Windows-Python3.7-msvc2015-64bit.exe] and changed nothing, just started opening the files. I can open and edit the simulation files (.case). But as we have many timesteps and changing the timestep also takes a few minutes, I want to export the pictures/renders of each timestep with the animation option (“Save Animation”). That works, but it still takes minutes to render each timestep. And when I look at my Task Manager, I can see that ParaView only uses one core (about 10%) of my system and no GPU power at all. Therefore I thought there must be room for improvement.

Maybe I should have installed the MPI version for multicore calculation?

The simulation is about a droplet of fluid being shot

It is the reading (I/O) that is taking a long time. Is your dataset decomposed or reconstructed? Do you have an SSD or a RAID disk?

I don’t know if my dataset is decomposed or reconstructed. I don’t even know what this means. I am very new to all that. But I do know that I have an SSD and that it is not the bottleneck. I added a screenshot for more details.

I have also installed the MPI version and Microsoft MPI recently, but nothing has changed regarding the speed or the usage of my cores.

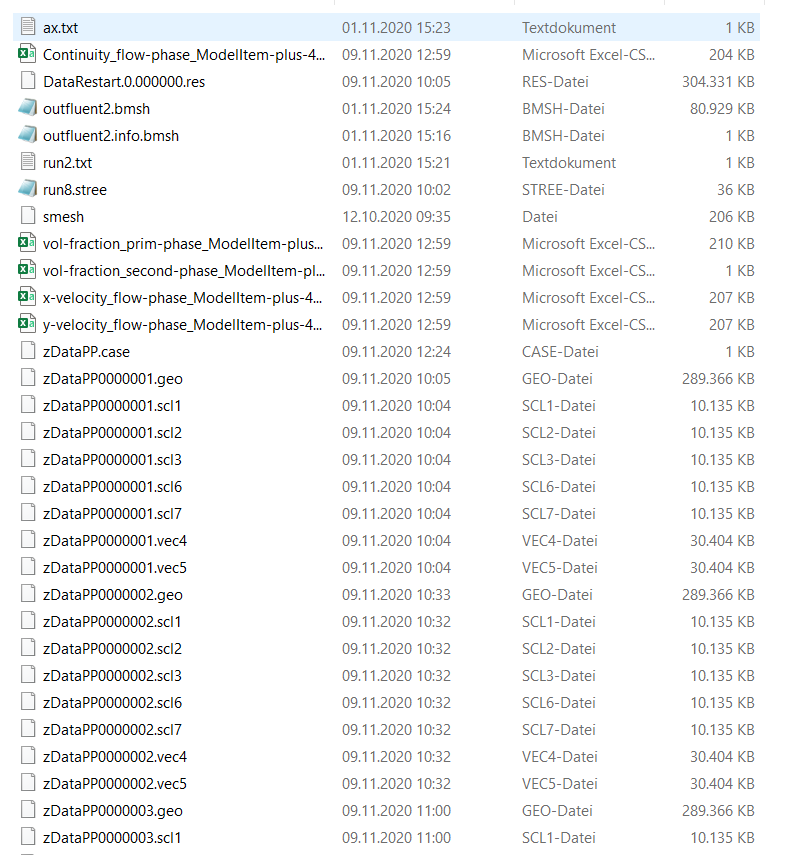

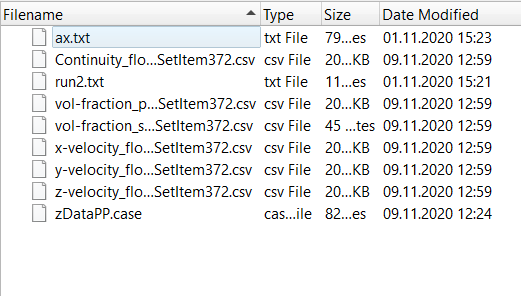

Can you share the content of the folder containing your .foam file, as well as a screenshot of the Properties panel when you open your .foam file in ParaView after clicking Apply?

I wrongly assumed you were working with an OpenFOAM case. My bad.

AFAIK, EnSight case files should already be readable in parallel.

Can you identify the bottleneck when generating the animation? Check the “Performance” part of the Task Manager.
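Alongside the Task Manager, one way to narrow this down from inside ParaView is to time the read and the first render separately. The following is a minimal sketch meant for ParaView's Python shell or `pvpython`; the helper names `timed` and `profile_first_step` are hypothetical, and the split is only approximate because the first render also includes geometry extraction.

```python
import time


def timed(fn, *args, **kwargs):
    """Call fn and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def profile_first_step(case_file):
    """Return (read_seconds, render_seconds) for the first timestep."""
    # Imported lazily so `timed` can be used without ParaView installed.
    from paraview.simple import OpenDataFile, GetActiveViewOrCreate, Show, Render

    reader = OpenDataFile(case_file)
    # UpdatePipeline forces the reader to actually load the data.
    _, read_s = timed(reader.UpdatePipeline)

    view = GetActiveViewOrCreate("RenderView")
    Show(reader, view)
    # The first Render also builds the surface geometry, so this is an upper bound.
    _, render_s = timed(Render, view)
    return read_s, render_s
```

If the read time dominates, the reader or the disk is the likely bottleneck. If the render time dominates, the graphics setup is worth checking first.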

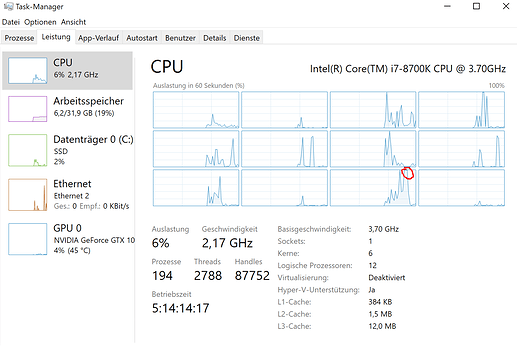

The bottleneck seems to me to be that ParaView only uses 1 core, and that core is at full power:

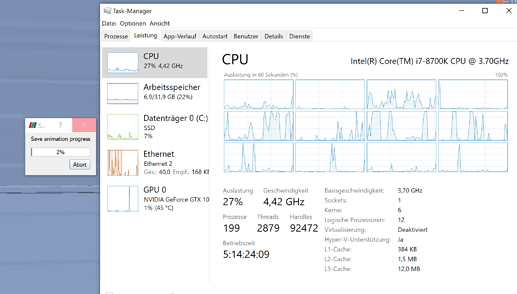

And while having it export a big animation:

No idea?

Nope, one would need to investigate why the EnSight reader is inefficient with your file.

Let me try a few guesses.

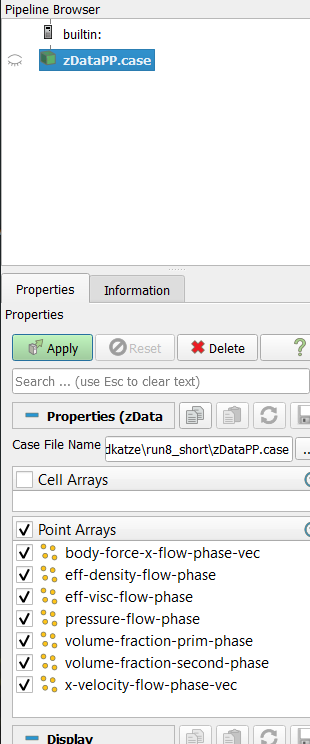

- First off, we need to see your Pipeline Browser. That is the window at the upper left.

- How are you rendering? Volume rendering or surface with edges?

- When you open your data, before doing anything else, in the Information tab (next to the Properties tab), how many cells and points do you have?

- How many files in the dataset?

- How did you generate the dataset? It looks like you have spread files, thus multiple cores?

- I am getting clarification on this, but I believe ParaView is NOT multithreaded. The reason is that it is designed to run with the back end (the server) on multiple cores of some (or many) nodes - on a cluster or supercomputer. Thus, with the exception of what you are trying to do (I suspect loading large data without a cluster), ParaView works well. For your use case, not so much.

- You can turn on multiple processing in the Edit/ Settings (possibly Advanced) dialog. From my experience, it doesn’t help.

- You are probably either rendering bound (which you suspect) or disk I/O bound (which Mathieu mentioned). If you are rendering bound, make sure that your NVIDIA graphics card is actually being used: check ParaView/ Help/ About/ OpenGL Version. If you are disk I/O bound, you are basically running too big a dataset on too small a computer. You need a cluster.

- If you don’t need the variable data, DON’T LOAD IT…

- Try pvbatch. Visualize your movies overnight.
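For the pvbatch suggestion, a minimal sketch of a batch script could look like the following. It opens the case file, shows it with the default representation, and writes one image per timestep via `SaveAnimation`. The function names `frame_pattern` and `render_all_timesteps`, the script name, and the file names in the usage line are hypothetical; camera, coloring, and resolution are left at their defaults and would need to be set to match the GUI session.

```python
import sys
from pathlib import Path


def frame_pattern(out_dir, stem="frame"):
    """Base file name handed to SaveAnimation, e.g. out/frame.png.

    ParaView appends a frame index to this name for each timestep.
    """
    return str(Path(out_dir) / f"{stem}.png")


def render_all_timesteps(case_file, out_dir):
    # Imported lazily so the helper above works without ParaView installed.
    from paraview.simple import (
        OpenDataFile, GetActiveViewOrCreate, GetAnimationScene,
        Show, ResetCamera, SaveAnimation,
    )

    reader = OpenDataFile(case_file)
    if reader is None:
        raise RuntimeError(f"could not open {case_file}")

    view = GetActiveViewOrCreate("RenderView")
    Show(reader, view)
    ResetCamera(view)

    # Make the animation step through the timesteps stored in the data.
    scene = GetAnimationScene()
    scene.UpdateAnimationUsingDataTimeSteps()

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    SaveAnimation(frame_pattern(out_dir), view)


# Only run when called with exactly two arguments: <case file> <output dir>.
if __name__ == "__main__" and len(sys.argv) == 3:
    render_all_timesteps(sys.argv[1], sys.argv[2])
```

It would be started along the lines of `pvbatch render_frames.py droplet.case frames`, and can be left to run unattended.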

That’s about all I have…

Alan

Thank you Alan

You can turn on multiple processing in the Edit/ Settings (possibly Advanced) dialog. From my experience, it doesn’t help.

That did the trick. Now it is so much faster and finally calculating on more than one core.

Oh, excellent. I will have to look at multiple processing again.

Note that I was incorrect. Many ParaView filters are multithreaded. This is done on a filter-by-filter basis, if I understand correctly.