Hello,

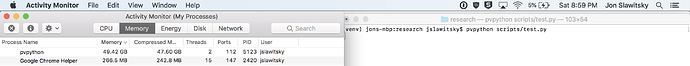

I’m trying to read binary data based on example 13.2.4 in the ParaView Guide, but I seem to be running into a memory leak with the code I’ve adapted below. When I activate my virtualenv and run the script with pvpython, memory usage grows without bound until I kill the process manually or the OS does it for me. The data set consists of 19 files of 262144 “longs” each (times 5 scalar variables), so I don’t think it should be consuming 50GB+ of memory. The attached screenshot shows the output from Activity Monitor. Here is the script:

from paraview.simple import (
    _DisableFirstRenderCameraReset,
    GetRenderView,
    ProgrammableSource,
    Render,
    Show,
)

_DisableFirstRenderCameraReset()

request_info = """
# Activate the virtualenv with necessary packages
activate_this = '/Users/jslawitsky/Desktop/research/venv/bin/activate_this.py'
execfile(activate_this, dict(__file__=activate_this))

import numpy
import yaml

executive = self.GetExecutive()
outInfo = executive.GetOutputInformation(0)

with open("/Users/jslawitsky/Desktop/research/sample/input/config.yaml", "r") as fp:
    config = yaml.load(fp)

outInfo.Set(
    executive.WHOLE_EXTENT(),
    0, config["grid_points.x"] - 1,
    0, config["grid_points.y"] - 1,
    0, config["grid_points.z"] - 2,
)
outInfo.Set(
    vtk.vtkDataObject.SPACING(),
    config["domain_size.x"] / config["grid_points.x"],
    config["domain_size.y"] / config["grid_points.y"],
    config["domain_size.z"] / (config["grid_points.z"] - 1),
)
outInfo.Set(
    vtk.vtkDataObject.ORIGIN(),
    0,
    0,
    (config["domain_size.z"] / (config["grid_points.z"] - 1)) / 2,
)

outInfo.Remove(executive.TIME_STEPS())
start = config["output.instantaneous.skip"] * config["time_step_size"]
stop = config["number_of_time_steps"] * config["time_step_size"]
step = config["output.instantaneous.frequency"] * config["time_step_size"]
for timestep in numpy.linspace(start, stop, (stop - start) / step):
    outInfo.Append(executive.TIME_STEPS(), timestep)

outInfo.Remove(executive.TIME_RANGE())
outInfo.Append(executive.TIME_RANGE(), config["output.instantaneous.skip"])
outInfo.Append(executive.TIME_RANGE(), config["number_of_time_steps"])
"""

request_data = """
# Activate the virtualenv with necessary packages
activate_this = "/Users/jslawitsky/Desktop/research/venv/bin/activate_this.py"
execfile(activate_this, dict(__file__=activate_this))

import numpy
import os
import yaml
from distutils.util import strtobool
from vtk.numpy_interface import algorithms as algs
from vtk.numpy_interface import dataset_adapter as dsa

executive = self.GetExecutive()
outInfo = executive.GetOutputInformation(0)

with open("/Users/jslawitsky/Desktop/research/sample/input/config.yaml", "r") as fp:
    config = yaml.load(fp)

scalars = {"p", "u", "v", "w"}
if strtobool(config["output.instantaneous.write_eddy_viscosity"]):
    scalars.add("nu_t")

ts = outInfo.Get(executive.TIME_STEPS())
if outInfo.Has(executive.UPDATE_TIME_STEP()):
    t = int(outInfo.Get(executive.UPDATE_TIME_STEP()))
else:
    print "no update time available"
    t = 0

exts = [executive.UPDATE_EXTENT().Get(outInfo, i) for i in xrange(6)]
nbfiles = (
    (config["number_of_time_steps"] - config["output.instantaneous.skip"]) /
    config["output.instantaneous.frequency"]
)
dims = [
    exts[1] - exts[0] + 1,
    exts[3] - exts[2] + 1,
    exts[5] - exts[4] + 1,
]
output.SetExtent(exts)

data = numpy.empty(dims[0] * dims[1] * dims[2] * nbfiles, dtype=numpy.dtype('<f'))
points_per_step = (
    config["grid_points.x"] *
    config["grid_points.y"] *
    (config["grid_points.z"] - 1)
)
dir_name = "/Users/jslawitsky/Desktop/research/sample/output/instantaneous-fields/"

def AddScalarVariable(nbfiles, varname):
    for i in xrange(nbfiles):
        # example file format: 'dir_name/p-001.bin'
        fname = "{}{}-{}.bin".format(dir_name, varname, str(i).zfill(3))
        with open(fname, "rb") as fp:
            fp.seek(0)
            begin = points_per_step * i
            end = points_per_step * (i + 1)
            data[begin:end] = numpy.fromfile(fp, dtype=numpy.dtype("<f"), count=-1, sep="")
    output.PointData.append(data, varname)

for varname in scalars:
    AddScalarVariable(nbfiles, varname)

output.GetInformation().Set(output.DATA_TIME_STEP(), t)
"""

View1 = GetRenderView()

pSource1 = ProgrammableSource()
pSource1.OutputDataSetType = 'vtkImageData'
pSource1.ScriptRequestInformation = request_info
pSource1.Script = request_data
pSource1.UpdatePipelineInformation()

Show(pSource1, View1)
Render()

When I comment out `output.PointData.append(data, varname)`, the script runs to completion, but nothing is displayed (as expected, since no data has been appended).
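
For scale, here is a rough estimate of what the raw data should occupy. This is only a sketch: it assumes 4-byte values (the `<f` dtype the script reads with), and the helper `estimate_bytes` and its parameter names are made up for illustration. If the values were really 8-byte longs, the total would simply double.

```python
def estimate_bytes(n_files, points_per_file, n_scalars, bytes_per_value=4):
    """Return the raw size in bytes of the whole data set (hypothetical helper)."""
    return n_files * points_per_file * n_scalars * bytes_per_value


# 19 files per variable, 262144 values per file, 5 scalar variables.
# Assumption: 4 bytes per value, matching numpy.dtype("<f").
total = estimate_bytes(n_files=19, points_per_file=262144, n_scalars=5)
print("{:.1f} MiB".format(total / (1024.0 * 1024.0)))  # prints "95.0 MiB"
```

So all time steps and all variables together should come to roughly 95 MiB, which is nowhere near 50GB.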

Any insights would be greatly appreciated.

Thanks,

Jon