Dear all,

I am using a post-processing script, run on my institution's HPC cluster, to generate streaklines for a simulation carried out on a multiblock mesh. I call pvbatch as follows:

```
mpiexec -np 6 pvbatch --force-offscreen-rendering $my_script.py
```

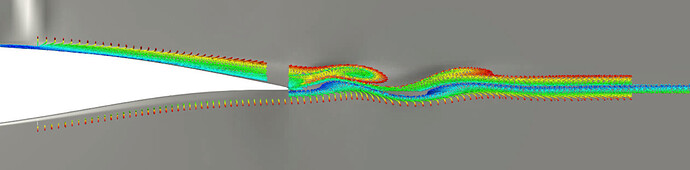

When I run this locally on my laptop (for a small number of time steps) it works completely fine. On the HPC, however, there are missing blocks in the rendering, as you can see in the attached screenshot. It is worth mentioning that the blocks appear and disappear, and it is not always the same blocks that do so. It doesn't seem to be a problem with the Particle Tracer filter, because it tracks the particle motion successfully. This is my first time using this kind of post-processing technique, so I am not very experienced with errors like this, where the script does not fail outright.

Thanks in advance!

I don’t know offhand what the issue is, but I wouldn’t discount problems with the particle tracer filter. From your picture, it looks like you have lots of little traces, so the traces might be failing to bridge across partitions in your job. This could be caused by problems with ghost cells in your data. If you have unstructured data, you might try running the Ghost Cells Generator filter.

My suggestion was just a guess, but yeah, that is worth a try.

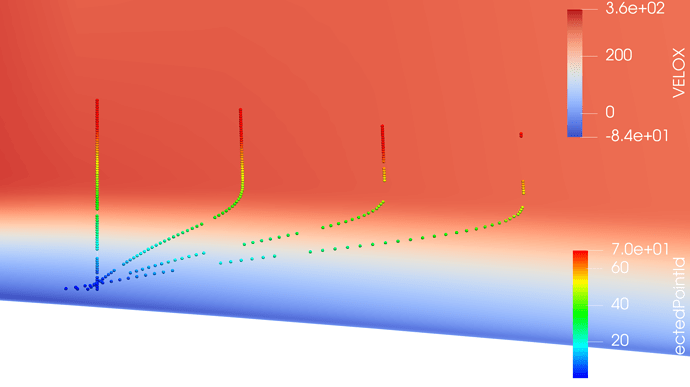

Well, this leads to a new issue : In my case, I can only apply the “ghost cells” filter after I use the “merge blocks” filter. BUT when I use the particle tracer after the “merge blocks” filter, there are missing seed points in the streaklines that change each time-step :

If calling Merge Blocks and then Generate Ghost Cells works (setting aside the seed issues), but calling only Merge Blocks does not, then it certainly sounds like the issue is that your data does not define ghost cells correctly. Ultimately, the solution depends on getting correct ghost cells.

ParaView has limited capabilities to generate these for you. It's always better if the data come with ghost cells. You never said what format your data is in. It sounds like the reader is repartitioning the data based on the number of MPI processes that you have. Perhaps it is not loading an appropriate layer of ghost cells. If your data has a regular structure, the reader could be leaving gaps of cells if it only partitions the points.
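To make the "cell gaps" point concrete, here is a toy sketch in plain Python (not ParaView code). It assumes a hypothetical 1D structured grid whose points are split into contiguous chunks, one per MPI rank, with each rank building only the cells whose points it holds. This is an illustration of the mechanism, not a description of how the VisItTecplotBinaryReader actually partitions data; the function names are made up for the example.

```python
def local_cells(n_points, n_ranks, ghost_layers=0):
    """Return, per rank, the set of cell indices that rank can build.

    Hypothetical toy model: a 1D structured grid of n_points points, where
    cell i joins points i and i+1. Points are split into contiguous chunks,
    one per rank, and each rank may also receive `ghost_layers` extra points
    belonging to the next rank.
    """
    chunk = -(-n_points // n_ranks)  # ceiling division
    cells_per_rank = []
    for rank in range(n_ranks):
        start = rank * chunk
        stop = min(start + chunk, n_points)        # end of owned points (exclusive)
        stop = min(stop + ghost_layers, n_points)  # extend with ghost points, if any
        # Cell i exists locally only if both points i and i+1 are local.
        cells_per_rank.append(set(range(start, max(start, stop - 1))))
    return cells_per_rank


def missing_cells(n_points, n_ranks, ghost_layers=0):
    """Cells that no rank can build, i.e. holes in what gets traced/rendered."""
    all_cells = set(range(n_points - 1))
    covered = set().union(*local_cells(n_points, n_ranks, ghost_layers))
    return sorted(all_cells - covered)


if __name__ == "__main__":
    print(missing_cells(100, n_ranks=1))                  # serial run: []
    print(missing_cells(100, n_ranks=6))                  # 6 ranks: [16, 33, 50, 67, 84]
    print(missing_cells(100, n_ranks=6, ghost_layers=1))  # 6 ranks + 1 ghost layer: []
```

With a single process (as on a laptop) nothing is missing; with six processes the cells straddling each partition boundary vanish, and one layer of ghost points closes the gaps. That is also why streaklines can break exactly at those boundaries.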

My data is exported as .plt files, and my solver leaves an extra point between the cells at the block boundaries. I use the “VisItTecplotBinaryReader” to load the data, and it seems to work completely fine. I don't know whether this has any impact, but I use the .ogv video output format with a resolution of 1500x750.

I am very curious about this, because this afternoon I downloaded the data to my laptop and ran the script (it took a while to run completely), and the output was totally fine.

I have been doing quite intensive testing these days, and I found that the missing blocks are NOT due to:

- video output format

- scene lighting

- merge blocks filter

- frame rate

- video quality

- particles rendered as points or spheres

- video resolution

It worked fine when I showed a velocity contour instead of the density-gradient contours (the ones shown in my previous images), which made me think of defining the gradient contour further upstream in the pipeline. Now my animation is rendered “correctly” on the HPC, with minor lighting issues in some blocks. Am I having these issues because I am rendering the animation on the CPU rather than on a GPU? Are there any recommended modules to load when using ParaView in pvbatch mode?

Based on everything you have described, it still sounds like the issue is with proper ghost cell information in your data, which is necessary when running in MPI parallel but generally not on a laptop. But it sounds like you got that working OK.

The “minor lighting issues” could be more than one thing. They could be genuine lighting issues if you are using a form of rendering that has global lighting. Or they could be ghost cell problems if the issue comes from something like normal generation.

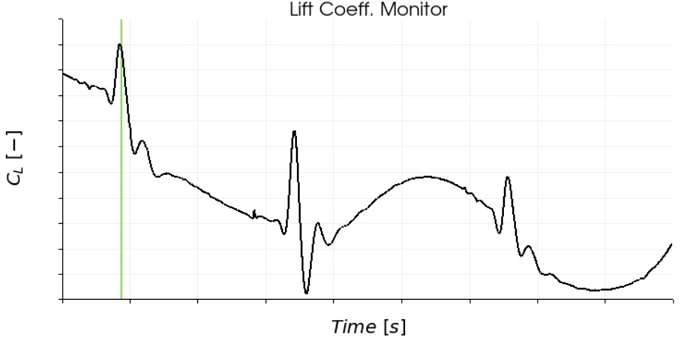

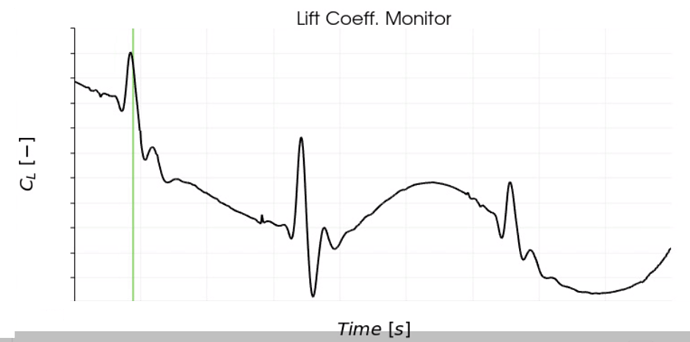

I have one more question regarding offscreen-rendering. Is there any control to define the line resolution in a graph plot? The image below has 900 points and the animation output has a very coarse line ploted on it instead of a smooth line. It doesn’t seem that the image resolution has any impact because the axis titles have a correct resolution, it is just the plotted line itself. I have attached 2 images of the same animation rendered in a HPC and in a laptop :

Rendered in HPC (coarse line)

Rendered in laptop (fine line)