Hi everyone,

I now have easier access to the workstation.

Setup: Windows 10, RTX 2080 GPU, driver version 430.86 (not the latest), ParaView 5.7.0-RC2.

I use a few custom materials that seem to work well with OSPRay:

```json
"water" : {
    "type": "Glass",
    "doubles" : {
        "attenuationColor" : [1.0, 1.0, 1.0],
        "attenuationDistance" : [1.0],
        "eta" : [1.33]
    }
},
"WaterLightBlue": {
    "type": "Principled",
    "doubles" : {
        "thin" : [1.0],
        "ior" : [1.33],
        "transmissionColor" : [0.22, 0.34, 0.47],
        "transmission" : [1.0],
        "thickness" : [1.0],
        "specular" : [1.0],
        "opacity" : [0.6],
        "diffuse" : [1.0],
        "backlight" : [0.0],
        "roughness" : [0.1]
    }
},
"WaterDarkBlue": {
    "type": "Principled",
    "doubles" : {
        "thin" : [1.0],
        "ior" : [1.33],
        "transmissionColor" : [0.22, 0.34, 0.47],
        "transmission" : [1.0],
        "thickness" : [1.0],
        "specular" : [1.0],
        "opacity" : [0.85],
        "diffuse" : [1.0],
        "backlight" : [0.0],
        "roughness" : [0.1]
    }
},
"YellowShip": {
    "type": "Principled",
    "doubles" : {
        "baseColor" : [1.0, 1.0, 0.0],
        "specular" : [0.5],
        "opacity" : [1.0],
        "diffuse" : [0.5],
        "roughness" : [0.5]
    }
}
```
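For reference, the four materials above can be assembled into one complete material library file with a short Python script. This is only a sketch: the top-level `family`/`version`/`materials` wrapper is an assumption based on the layout of ParaView's bundled OSPRay material files and is not part of the excerpt above, and `principled_water` and `write_library` are hypothetical helper names. Building both Principled water variants from one function also makes it explicit that `WaterLightBlue` and `WaterDarkBlue` differ only in `opacity`.

```python
import json


def principled_water(opacity):
    """Principled water material; the two variants differ only in opacity."""
    return {
        "type": "Principled",
        "doubles": {
            "thin": [1.0],
            "ior": [1.33],
            "transmissionColor": [0.22, 0.34, 0.47],
            "transmission": [1.0],
            "thickness": [1.0],
            "specular": [1.0],
            "opacity": [opacity],
            "diffuse": [1.0],
            "backlight": [0.0],
            "roughness": [0.1],
        },
    }


# Same material definitions as in the post.
materials = {
    "water": {
        "type": "Glass",
        "doubles": {
            "attenuationColor": [1.0, 1.0, 1.0],
            "attenuationDistance": [1.0],
            "eta": [1.33],
        },
    },
    "WaterLightBlue": principled_water(0.6),
    "WaterDarkBlue": principled_water(0.85),
    "YellowShip": {
        "type": "Principled",
        "doubles": {
            "baseColor": [1.0, 1.0, 0.0],
            "specular": [0.5],
            "opacity": [1.0],
            "diffuse": [0.5],
            "roughness": [0.5],
        },
    },
}

# ASSUMPTION: top-level layout of a ParaView material library file.
library = {"family": "OSPRay", "version": "0.0", "materials": materials}


def write_library(path):
    """Write the whole library as indented JSON to `path`."""
    with open(path, "w") as f:
        json.dump(library, f, indent=2)


if __name__ == "__main__":
    write_library("ship_materials.json")
```

The resulting file can then be loaded through ParaView's material editor in place of hand-edited JSON fragments.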

My goal is to render a ship together with the free surface (YellowShip for the ship material, WaterDarkBlue for the free surface).

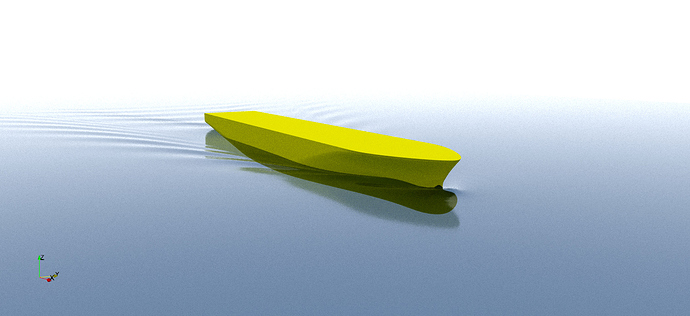

With OSpray:

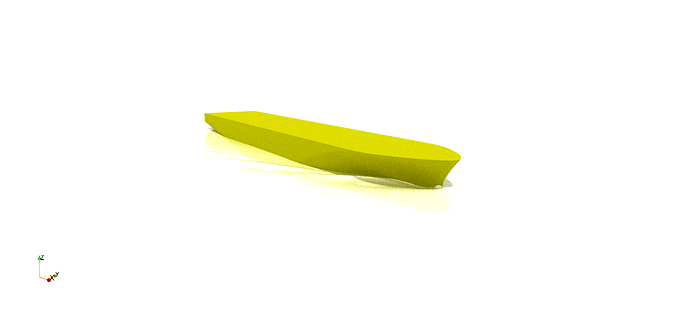

With OptiX

There seem to be something not working properly with the materials!

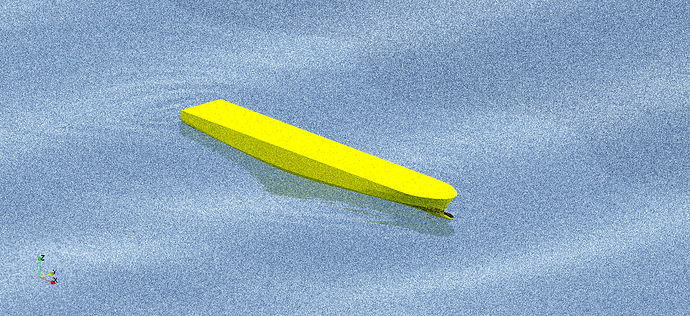

Another attempt (YellowShip for the ship, WaterDarkBlue for a box surrounding the whole domain, and “water” for the free surface):

OSPRay:

OptiX:

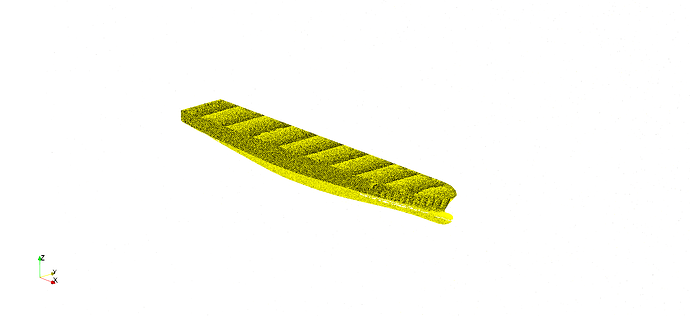

I also tried without materials, but that does not seem very successful either.

So, does anybody have a clue what’s going on?

One thing I do see with OptiX is lower CPU usage and a quicker time to image, but the result isn’t really up to my expectations…