Dear PARAVIEW community,

greetings. This is Marco, I am new here, recently started used this wonderful tool. I hope this email finds you all well.

I have a question regarding the creation of time-varying auxiliary curvilinear coordinates, that I’d like to append to a netcdf files. For pre-processing, I am using Python.

As an example, I am starting from the available netcdf inside the xarray package: https://xesmf.readthedocs.io/en/v0.1.2/Curvilinear_grid.html

When printing the array in Python (headers), I get:

Dimensions: (time: 36, x: 275, y: 205)

Coordinates: * time (time) datetime64[ns] 1980-09-16T12:00:00 1980-10-17 ...

xc (y, x) float64 189.2 189.4 189.6 189.7 189.9 190.1 190.2 190.4 ...

yc (y, x) float64 16.53 16.78 17.02 17.27 17.51 17.76 18.0 18.25 ...

Dimensions without coordinates: x, y

Data variables: Tair (time, y, x) float64 nan nan nan nan nan nan nan nan nan nan ...

In Python, I managed to mimic the structure of the file linked above. The field I am encapsulating into the newly-created netcdf file (in the example above: Tair) is timevarying but, unlike the example linked above, my xc, yc coordinates are timevarying too.

Instead of y, I am actually working over the vertical coordinate (x,z, time). So, when coding in python I added to zc (or yc in the original example) another dimension: time. Inside my for loop, when assigning Tair values for each timestep, I impose new yc coordinates too:

ds = xr.Dataset(

{

q2merge: ([ "time", "z", "x"], sal), #sal = salinity

},

coords={

"time": write_t_sereis(newt), #custom function to convert to time

"xc": (["time","z","x"], np.empty([nt, nz+1, nx],dtype='float32')),

"yc": (["time","z","x"], np.empty([nt, nz+1, nx],dtype='float32')), #------- as in CHapter 6.2. of http://cfconventions.org/cf-conventions/cf-conventions.html#discrete-axis

},

)#

#Start a time-marching loop and, at each time-step, append:

# - new vertical coordinates z

# - scalar field at timestep t

c= 0 # some counter

for thatStep in T:

yc[c,:,:] = Function_to_extract_zcoords(thatStep) # yc = yc(time, z, x)

# .....

# Add scalar field

ds[q2merge][c,:,:]=Function_to_extract_ScalarField(thatStep)

# .....

c = c +1

#Outside the loop, once done, append metadata:

ds['xc'].attrs['axis']="X"

ds['xc'].attrs['long_name']="longitude of grid cell center"

ds['xc'].attrs['units']="degrees_east"

#Should be vertical, Z, but it seems that PARAVIEW has a bug (see below)

ds['yc'].attrs['long_name']="latitude of grid cell center"

ds['yc'].attrs['units']="degrees_north"

ds.attrs['coordinates']="yc xc"

ds.close() #close the door when you leave

fname= "{}.nc".format(q2merge) #some meaningful name

ds.to_netcdf(fname, unlimited_dims={'time':True}) #flag as unlimited

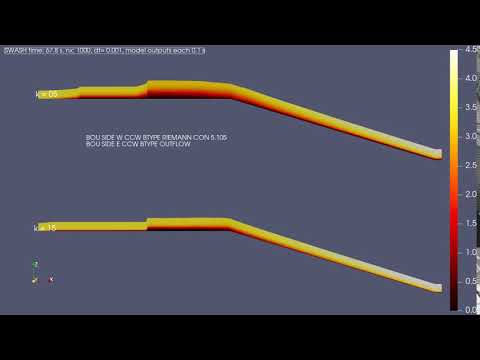

However when I try post process that result (all t-steps in one file) in PV and visualize the results, vertical coordinates are not varying (here I call them “y”, but they are actually vertical, as PV seems to have a bug and fails in recognizing it. For the record, an issue was already posted: https://gitlab.kitware.com/vtk/vtk/-/issues/18035 ).

I tried saving all timesteps separately. In this case, I see that each time-step vertical structures preserves the desired structures (height of vertical levels), meaning that I can see the actual wire-frames fluctuating. However, when merging manually (with cdo or with nco), everything gets lost.

My questions:

-

does xarray allows time-varying auxiliary coordinates?

-

if so am I doing it correctly?

-

is the problem in my python code? or is PV not (yet?) able to understand time-varying coordinates?

If needed, I’d be happy to share my Python code as well as individual/merged netcdf files.

Thank you so much for the time you will dedicating to this.