Hi Connor,

I forgot to reply here previously, sorry about that. There is no need to create a parallel post on the same topic, though.

Coming back to my previous answer:

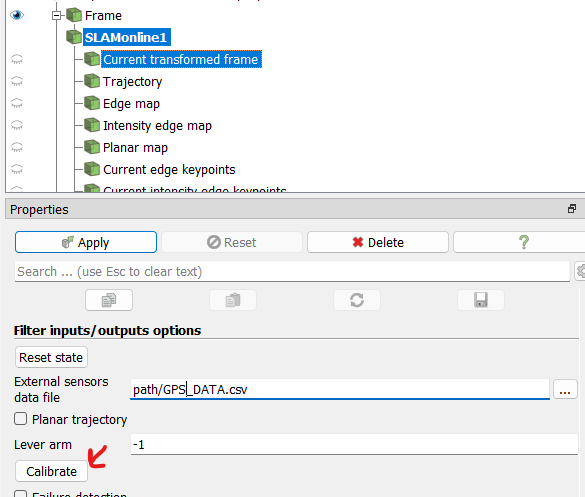

- You need to provide a calibration matrix to transform the poses into the LiDAR frame (otherwise the motion information you send to the algorithm points in the wrong direction).

- You need to have the data time-synchronized, otherwise the algorithm cannot determine which pose to use for each LiDAR frame (and for each point within the frame).
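To make these two points concrete, here is a minimal sketch in plain Python. It is not LidarView or SLAM code: the function names, the sample poses and the calibration values are all made up for illustration, and orientation interpolation is left out to keep it short. It interpolates the external sensor position at a LiDAR timestamp (which only makes sense if both clocks are synchronized), then composes the result with an assumed sensor-to-LiDAR calibration matrix.

```python
from bisect import bisect_right

def interpolate_position(times, positions, t):
    """Linearly interpolate (x, y, z) at time t from strictly increasing pose samples."""
    if not times[0] <= t <= times[-1]:
        raise ValueError("timestamp outside the pose time range (clocks not synchronized?)")
    i = bisect_right(times, t)
    if i == len(times):  # t is exactly the last sample
        return tuple(positions[-1])
    t0, t1 = times[i - 1], times[i]
    a = (t - t0) / (t1 - t0)
    return tuple(p0 + a * (p1 - p0) for p0, p1 in zip(positions[i - 1], positions[i]))

def translation(x, y, z):
    """4x4 homogeneous transform with identity rotation."""
    return [[1, 0, 0, x], [0, 1, 0, y], [0, 0, 1, z], [0, 0, 0, 1]]

def matmul4(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]

# Hypothetical GPS/IMU samples: timestamps in seconds, positions in meters.
pose_times = [0.0, 1.0, 2.0]
pose_positions = [(0.0, 0.0, 10.0), (2.0, 0.0, 10.0), (4.0, 2.0, 10.0)]

# Hypothetical calibration: LiDAR mounted 0.1 m below the GPS/IMU,
# rotated 180 degrees around the vertical axis.
T_SENSOR_LIDAR = [[-1, 0, 0, 0.0], [0, -1, 0, 0.0], [0, 0, 1, -0.1], [0, 0, 0, 1]]

def lidar_pose_at(t):
    """World pose of the LiDAR at time t (sensor orientation assumed identity here)."""
    x, y, z = interpolate_position(pose_times, pose_positions, t)
    return matmul4(translation(x, y, z), T_SENSOR_LIDAR)

if __name__ == "__main__":
    for row in lidar_pose_at(1.5):
        print(row)
```

With this made-up mounting, the LiDAR x axis points opposite to the GPS/IMU x axis. If the calibration were skipped, the poses would be interpreted as if both axes were aligned, which is the "wrong direction" problem from the first point.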

Responding to your other post:

> I have confirmed that my external sensor .csv data is correct and properly formatted (see attached.) What is strange to me, is that each time I run SLAM with the same external sensor data, the trajectory turns out differently! This implies to me that my external sensor data is not determining the trajectory as it should

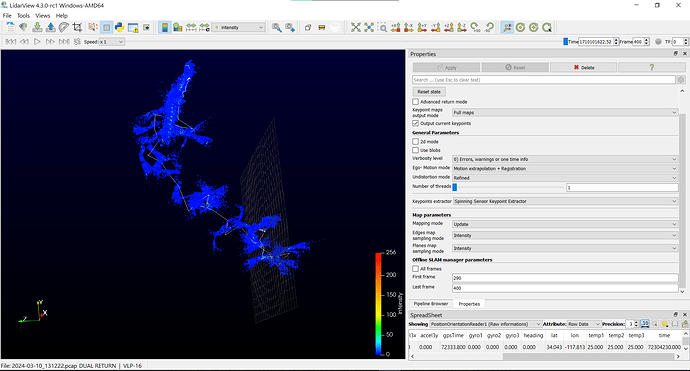

Which player setting did you use in LidarView? By default, it is set to *1, which emulates real time and can lead to non-deterministic results: some frames are skipped when processing takes longer than real time, and which ones are skipped depends on how your PC schedules the process.

=> You should use the All Frames setting to make sure every frame is played.

> Everything considered, my professor and I have become rather frustrated with what seems like a straightforward task: using GPS/IMU data from a drone to aggregate LiDAR frames. We have the position and orientation data, so why is it so difficult to integrate this data in LidarView? It seems like a serious product flaw…

Without calibration and time synchronization, this data is not usable. We have some tools in LidarView to calibrate the GPS against the LiDAR, using both the LiDAR-estimated trajectory and the GPS data. However, this needs to be done in conditions where LiDAR SLAM alone gives a good enough trajectory. This is available here

Some explanation here

> At this point, I’m not sure we would recommend Velodyne products to other researchers trying to capture aerial LiDAR data, but hopefully this problem can still be resolved.

Drawing conclusions about Velodyne products from the results of external software that was given incomplete data seems hasty to me…

Regarding the transformation of latitude/longitude into x/y coordinates:

The reference library is libproj.

This is handled in LidarView in this part when GPS data is provided alongside the LiDAR data in pcap files
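To give an idea of what such a conversion involves, here is a small plain Python sketch. It is not what libproj or LidarView does: libproj applies a proper map projection, whereas this uses a simple local flat-Earth (equirectangular) approximation that is only reasonable over short distances around a reference point. The function name `latlon_to_local_xy` and the reference coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6378137.0  # WGS84 semi-major axis, in meters

def latlon_to_local_xy(lat_deg, lon_deg, lat0_deg, lon0_deg):
    """Approximate east (x) and north (y) offsets in meters from a reference point.

    Flat-Earth approximation: only valid for small areas, not a substitute
    for a real projection library.
    """
    lat0 = math.radians(lat0_deg)
    x = EARTH_RADIUS_M * math.radians(lon_deg - lon0_deg) * math.cos(lat0)
    y = EARTH_RADIUS_M * math.radians(lat_deg - lat0_deg)
    return x, y

if __name__ == "__main__":
    # Hypothetical take-off point used as the local origin.
    lat0, lon0 = 45.0, 5.0
    print(latlon_to_local_xy(45.001, 5.001, lat0, lon0))
```

For a drone survey, the first GPS fix is a natural choice for the reference point, so that the trajectory starts near (0, 0).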